Long-term Temporal Convolutions for Action Recognition

People

Gül Varol

Ivan Laptev

Cordelia Schmid

Abstract

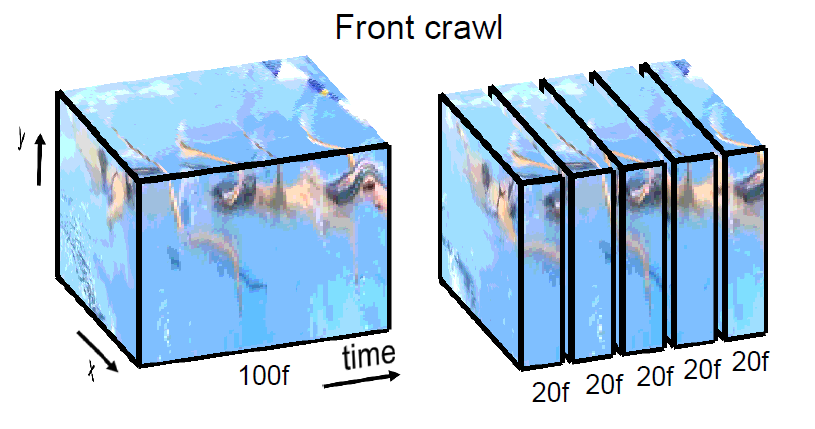

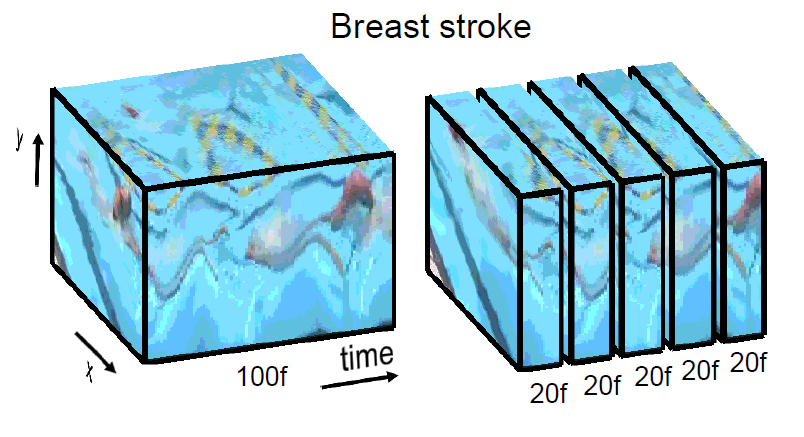

Typical human actions last several seconds and exhibit characteristic spatio-temporal structure. Recent methods attempt to capture this structure and learn action representations with convolutional neural networks. Such representations, however, are typically learned at the level of a few video frames, failing to model actions at their full temporal extent. In this work we learn video representations using neural networks with long-term temporal convolutions (LTC). We demonstrate that LTC-CNN models with increased temporal extents improve the accuracy of action recognition. We also study the impact of different low-level representations, such as raw values of video pixels and optical flow vector fields, and demonstrate the importance of high-quality optical flow estimation for learning accurate action models. We report state-of-the-art results on two challenging benchmarks for human action recognition: UCF101 (92.7%) and HMDB51 (67.2%).

Paper

BibTeX

@ARTICLE{varol18_ltc,
  title   = {Long-term Temporal Convolutions for Action Recognition},
  author  = {Varol, G{\"u}l and Laptev, Ivan and Schmid, Cordelia},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year    = {2018},
  volume  = {40},
  number  = {6},
  pages   = {1510--1517},
  doi     = {10.1109/TPAMI.2017.2712608}
}

Long- versus short-term temporal networks

The video below shows 5 filters from each of layers 3, 4 and 5 of the 16f and 100f networks trained on UCF101. For each filter, it shows the 6 test videos that produce the top activations. The location of the maximum activation in each video is marked with a green circle, both in space and in time. The support of this location gets larger in higher layers of the network. For visibility, the 16-frame videos are played at 3 fps and the 100-frame videos at 12 fps; the original videos are 25 fps. The arrow indicates which filter is being shown. For more details and interpretation, refer to the arXiv report.
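To make the playback rates above concrete, the following short sketch works out how much original video each clip covers and how long it plays in the visualization. It uses only the numbers stated above (16 and 100 frames, 3 and 12 fps playback, 25 fps source); the function name `clip_timing` is a hypothetical helper introduced for illustration and is not part of the authors' code.

```python
ORIGINAL_FPS = 25  # frame rate of the source videos, as stated in the text


def clip_timing(num_frames, playback_fps, original_fps=ORIGINAL_FPS):
    """Return (real_seconds, playback_seconds, slowdown) for one clip.

    real_seconds:     duration the clip covers in the original video
    playback_seconds: duration of the clip when played at playback_fps
    slowdown:         how many times slower than real time it is played
    """
    real_seconds = num_frames / original_fps
    playback_seconds = num_frames / playback_fps
    slowdown = original_fps / playback_fps
    return real_seconds, playback_seconds, slowdown


# The two settings described in the text: (label, frames, playback fps).
for name, frames, fps in [("16f", 16, 3), ("100f", 100, 12)]:
    real, shown, slow = clip_timing(frames, fps)
    print(f"{name}: covers {real:.2f} s of video, "
          f"plays for {shown:.2f} s ({slow:.2f}x slower than real time)")
```

Under these numbers, a 16-frame input spans 0.64 s of the original video while a 100-frame input spans 4 s, which is in line with the abstract's point that actions typically last several seconds.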

Acknowledgements

This work was supported by the ERC starting grant ACTIVIA, the ERC advanced grant ALLEGRO, Google and Facebook Research Awards and the MSR-Inria joint lab.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.