Paper

V. Delaitre, I. Laptev and J. Sivic

Recognizing human actions in still images: a study of bag-of-features and part-based representations

Proceedings of the 21st British Machine Vision Conference, Aberystwyth, September 2010, poster

PDF | Abstract | BibTeX | Poster

Abstract

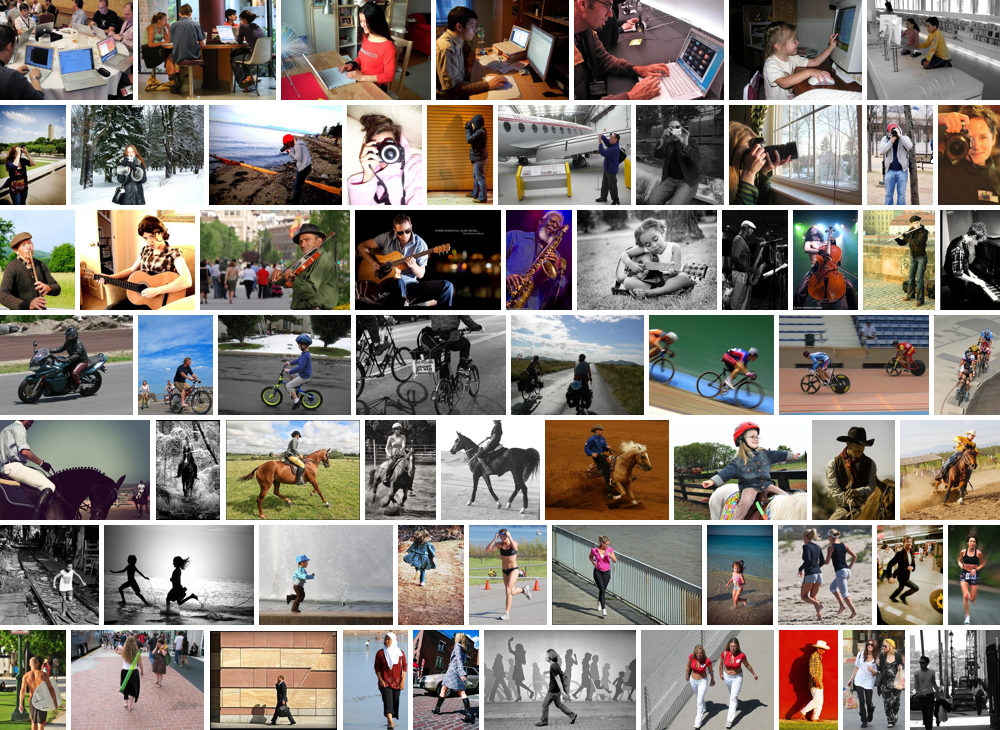

Recognition of human actions is usually addressed in the scope of video interpretation. Meanwhile, common human actions such as “reading a book”, “playing a guitar” or “writing notes” also provide a natural description for many still images. In addition, some actions in video such as “taking a photograph” are static by their nature and may require recognition methods based on static cues only. Motivated by the potential impact of recognizing actions in still images and the little attention this problem has received in computer vision so far, we address recognition of human actions in consumer photographs. We construct a new dataset with seven classes of actions in 968 Flickr images representing natural variations of human actions in terms of camera view-point, human pose, clothing, occlusions and scene background. We study action recognition in still images using the state-of-the-art bag-of-features methods as well as their combination with the part-based Latent SVM approach of Felzenszwalb et al. In particular, we investigate the role of background scene context and demonstrate that improved action recognition performance can be achieved by (i) combining the statistical and part-based representations, and (ii) integrating person-centric description with the background scene context. We show results on our newly collected dataset of seven common actions as well as demonstrate improved performance over existing methods on the datasets of Gupta et al. and Yao and Fei-Fei.

BibTeX

@InProceedings{Delaitre10,
  author = "Delaitre, V. and Laptev, I. and Sivic, J.",
  title = "Recognizing human actions in still images: a study of bag-of-features and part-based representations",
  booktitle = bmvc,
  note = "updated version, available at http://www.di.ens.fr/willow/research/stillactions/",
  year = "2010",
}

Code

Dataset