P-CNN: Pose-based CNN Features for Action Recognition

Abstract

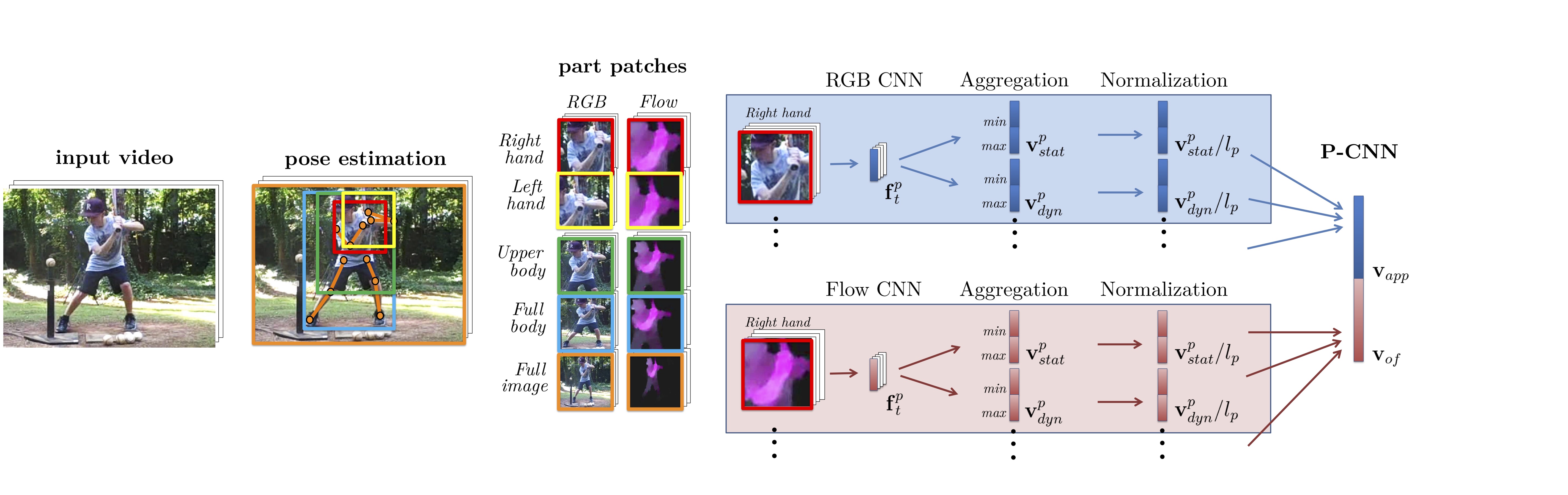

This work targets human action recognition in video. While recent methods typically represent actions by statistics of local video features, here we argue for the importance of a representation derived from human pose. To this end we propose a new Pose-based Convolutional Neural Network descriptor (P-CNN) for action recognition. The descriptor aggregates motion and appearance information along tracks of human body parts. We investigate different schemes of temporal aggregation and experiment with P-CNN features obtained both for automatically estimated and manually annotated human poses. We evaluate our method on the recent and challenging JHMDB and MPII Cooking datasets. For both datasets our method shows consistent improvement over the state of the art.
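The aggregation idea in the abstract can be made concrete with a small sketch. Per-frame CNN features are extracted for each body-part track. These per-frame features are then pooled over time into a fixed-length vector. The sketch below is written in Python rather than the released Matlab code. It assumes one particular aggregation scheme:

* element-wise max and min pooling of the per-frame features ("static");
* the same pooling applied to feature differences between frames t and t + delta_t ("dynamic").

The function names, the default delta_t = 4, and the per-block L2 normalization are hypothetical illustration choices. They are not the API or exact settings of the authors' code.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def _elementwise(frames: Sequence[Vector], reducer: Callable) -> Vector:
    """Apply reducer (e.g. max or min) to each feature dimension across time."""
    return [reducer(values) for values in zip(*frames)]


def _l2_normalize(v: Vector) -> Vector:
    """Hypothetical normalization step: scale a vector to unit L2 norm."""
    norm = sum(x * x for x in v) ** 0.5
    return [x / norm for x in v] if norm > 0 else list(v)


def aggregate_part_track(frames: Sequence[Vector], delta_t: int = 4) -> Vector:
    """Pool the per-frame features of one body-part track into one vector.

    Assumed scheme: max/min pooling of the raw features (static part)
    plus max/min pooling of differences f[t + delta_t] - f[t] (dynamic part).
    """
    if len(frames) <= delta_t:
        raise ValueError("track must contain more than delta_t frames")
    static = _elementwise(frames, max) + _elementwise(frames, min)
    diffs = [
        [b - a for a, b in zip(frames[t], frames[t + delta_t])]
        for t in range(len(frames) - delta_t)
    ]
    dynamic = _elementwise(diffs, max) + _elementwise(diffs, min)
    return _l2_normalize(static) + _l2_normalize(dynamic)


def p_cnn_descriptor(part_tracks: Sequence[Sequence[Vector]],
                     delta_t: int = 4) -> Vector:
    """Concatenate the aggregated vectors of all part tracks (one feature stream)."""
    descriptor: Vector = []
    for frames in part_tracks:
        descriptor.extend(aggregate_part_track(frames, delta_t))
    return descriptor


if __name__ == "__main__":
    # Two hypothetical part tracks, 6 frames each, 3-dimensional features.
    track = [[float(t), 0.5 * t, 1.0] for t in range(6)]
    desc = p_cnn_descriptor([track, track])
    print(len(desc))  # 2 parts x (6 static + 6 dynamic) = 24
```

Because every track is pooled over time, the descriptor length depends only on the number of parts and the feature dimension, not on the video length. This is what allows a standard linear classifier to be trained on top of it.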

Paper

BibTeX

@inproceedings{cheronICCV15,
  TITLE = {{P-CNN: Pose-based CNN Features for Action Recognition}},
  AUTHOR = {Ch{\'e}ron, Guilhem and Laptev, Ivan and Schmid, Cordelia},
  BOOKTITLE = {ICCV},
  YEAR = {2015},
}

Code

Matlab code to compute P-CNN.

CNN models

MPII Cooking estimated joint positions

Poster

P-CNN poster

Spotlight

P-CNN spotlight

Acknowledgements

This work was supported by the MSR-Inria joint lab, a Google Research Award, the ERC grant Activia and the ERC advanced grant ALLEGRO.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.