Predicting Actions from Static Scenes

People

- Tuan-Hung VU

- Catherine Olsson

- Ivan Laptev

- Aude Oliva

- Josef Sivic

Abstract

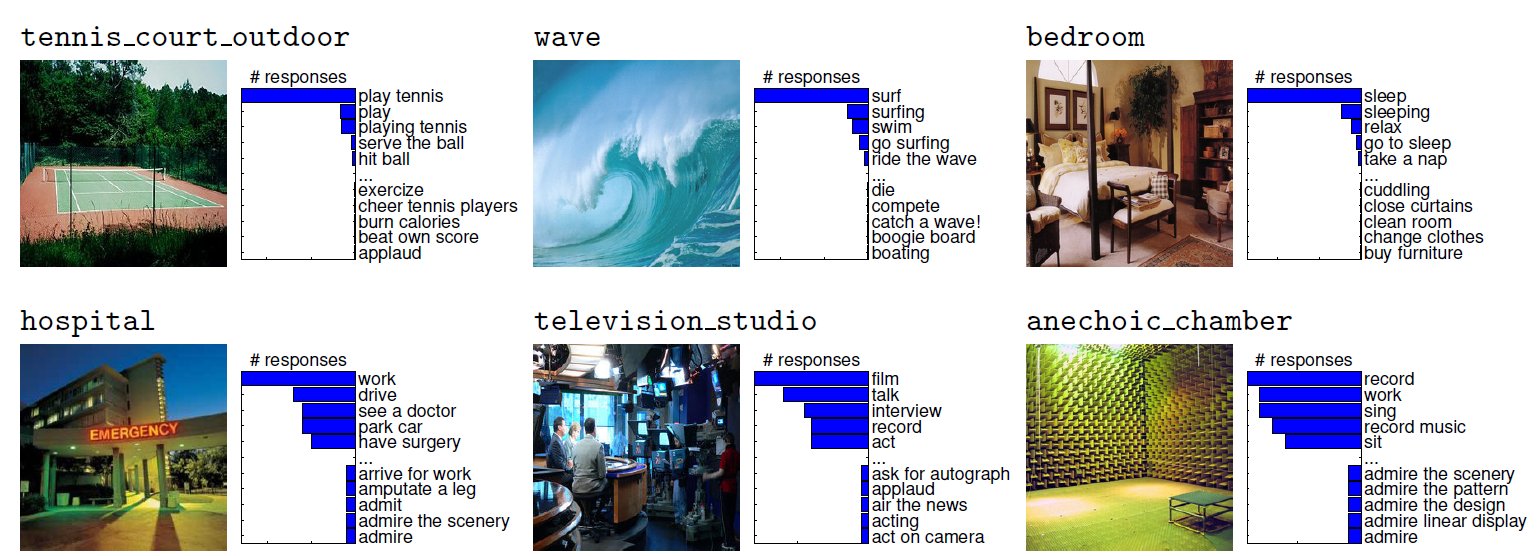

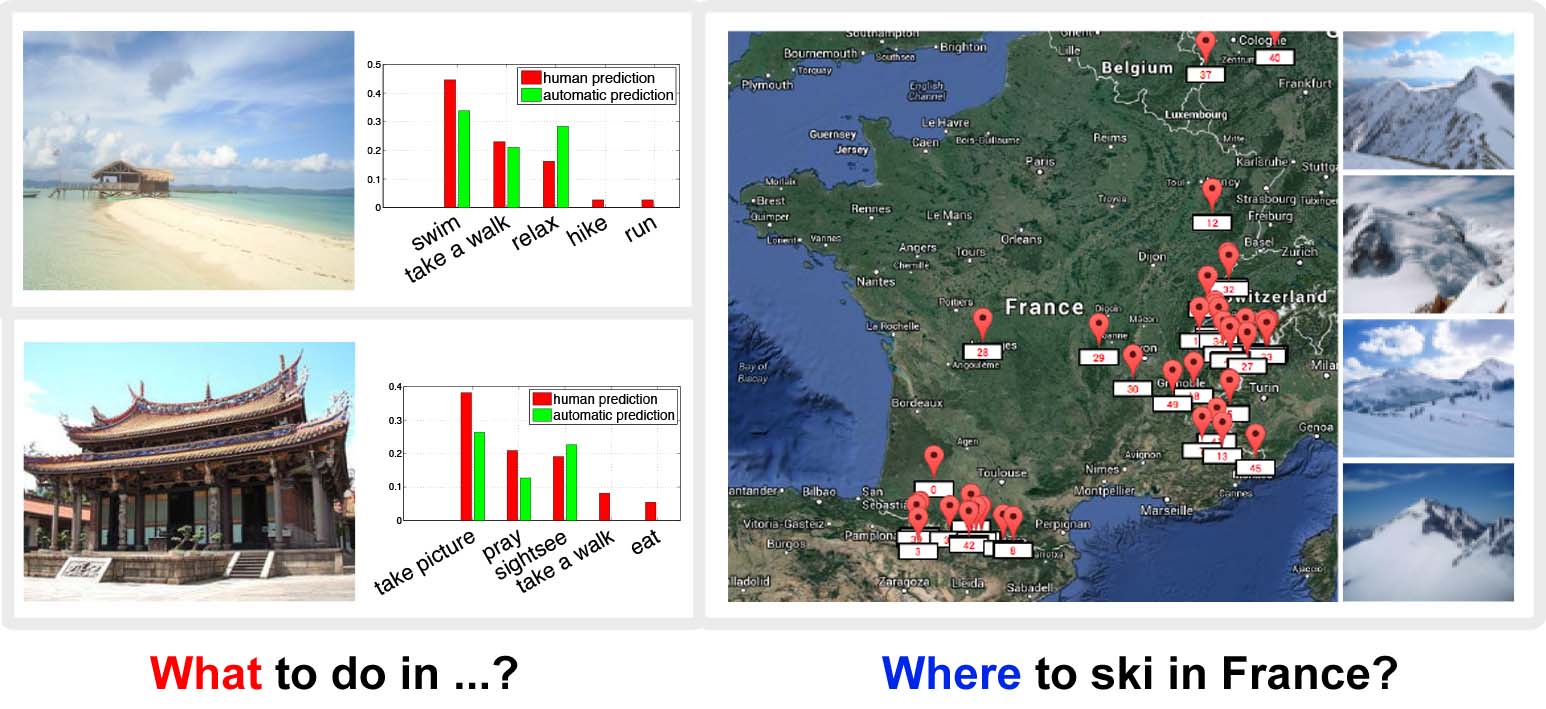

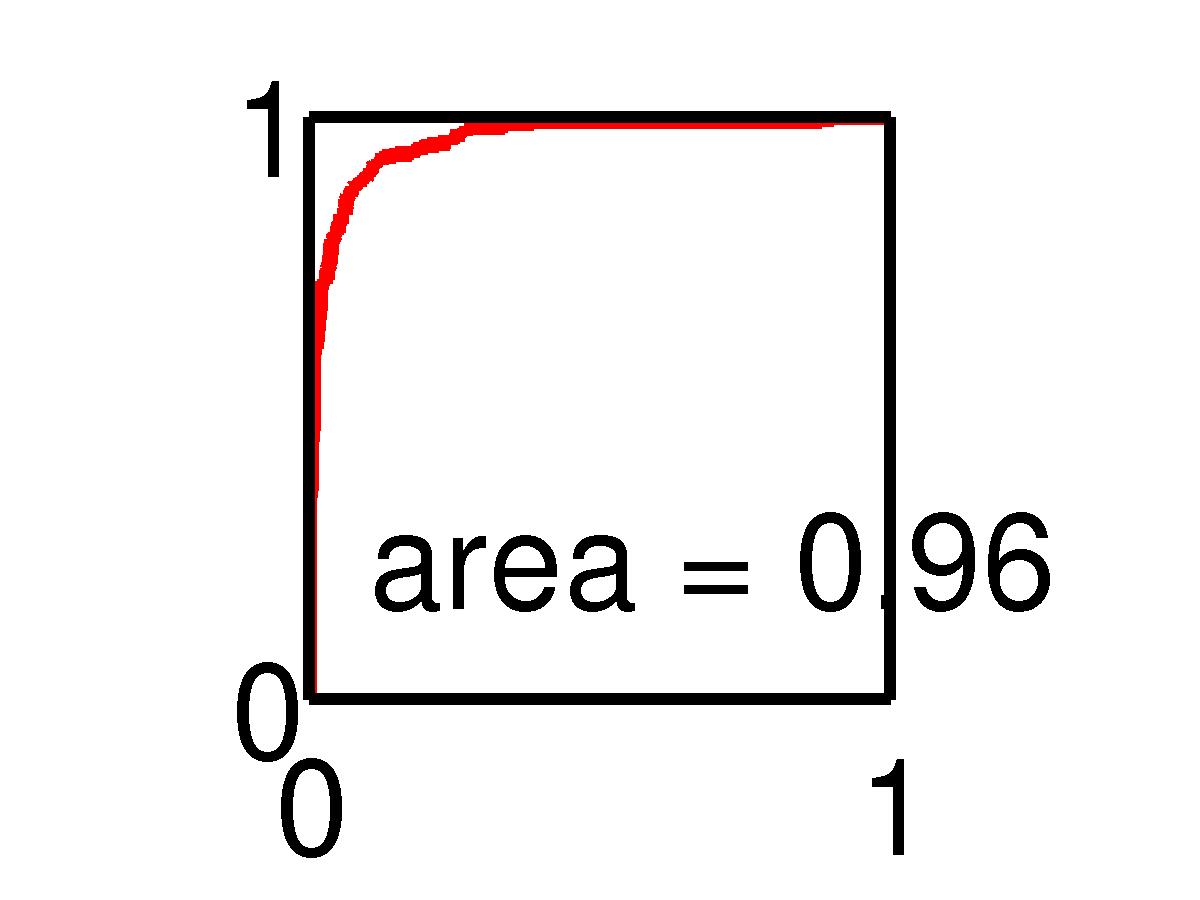

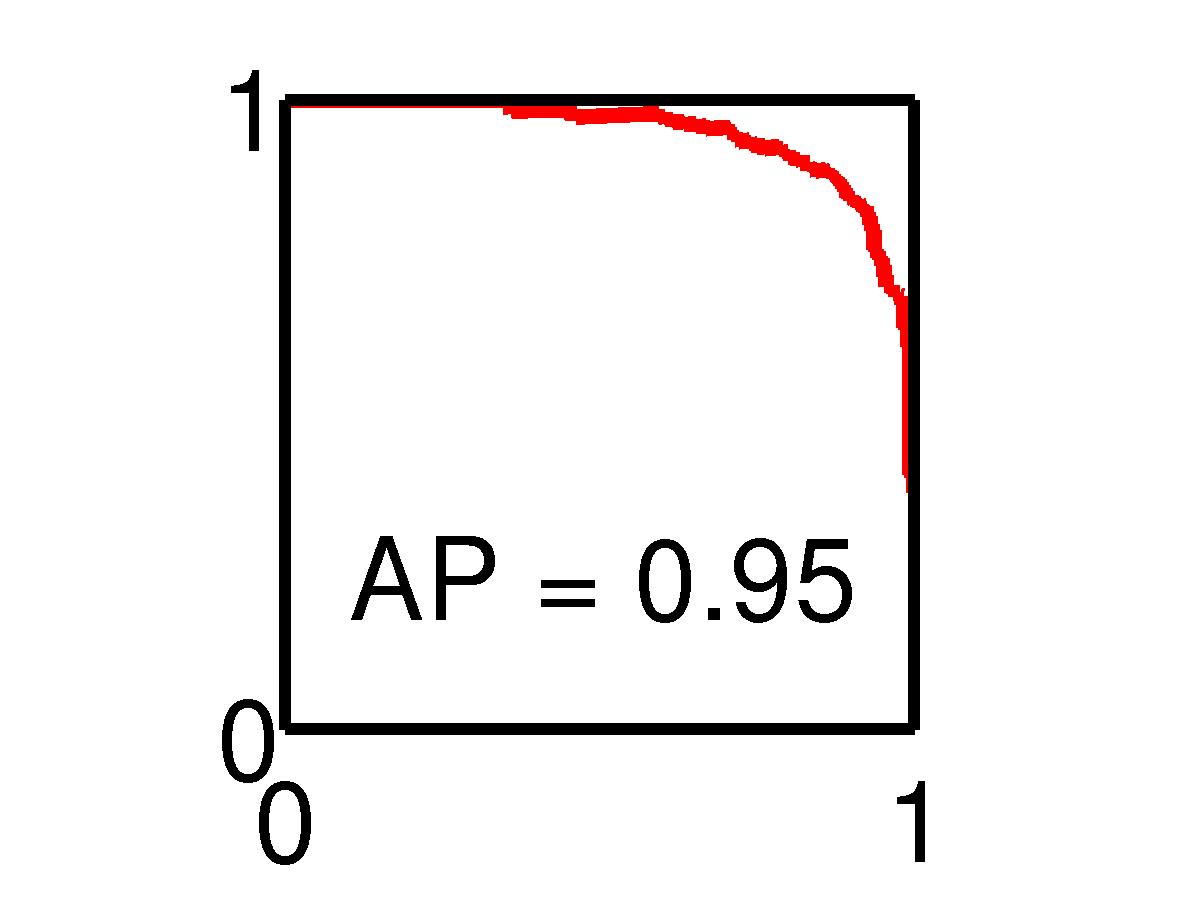

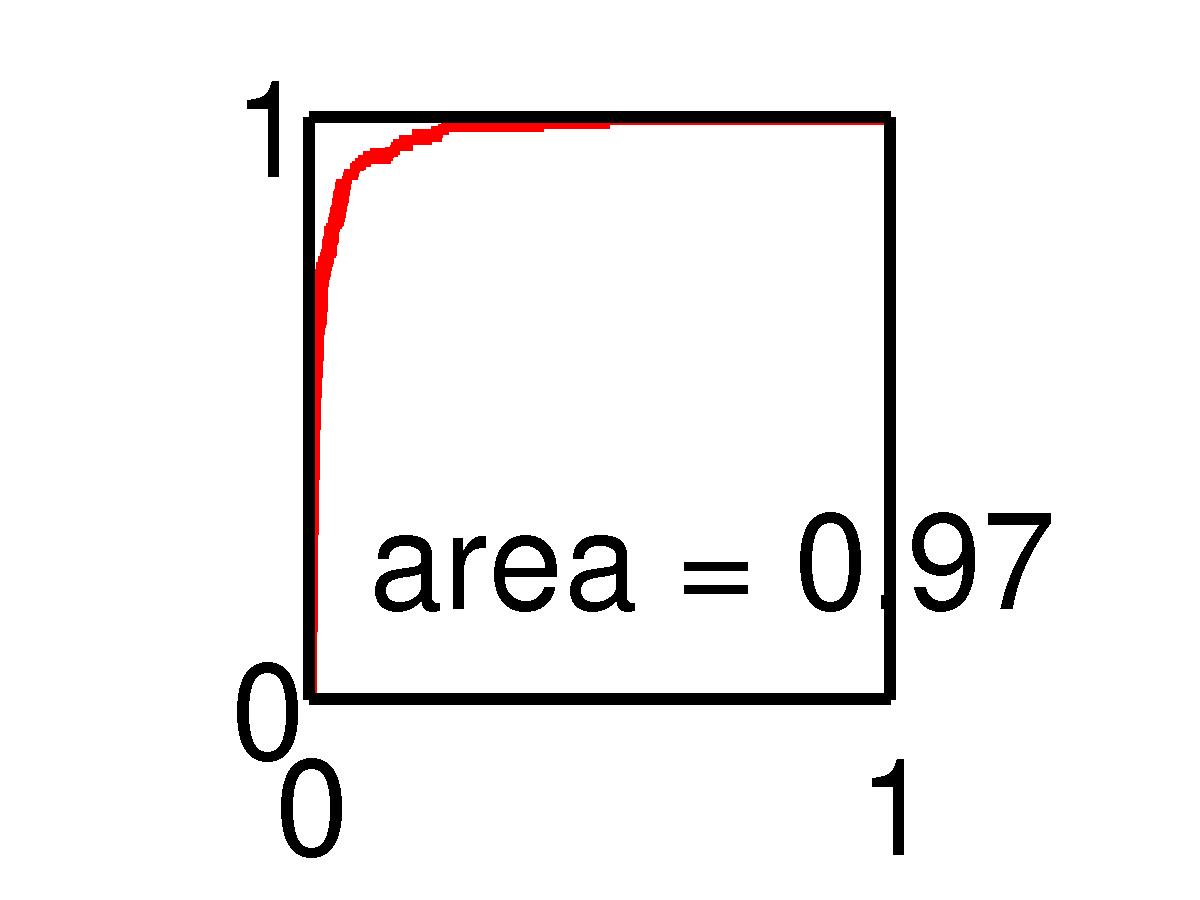

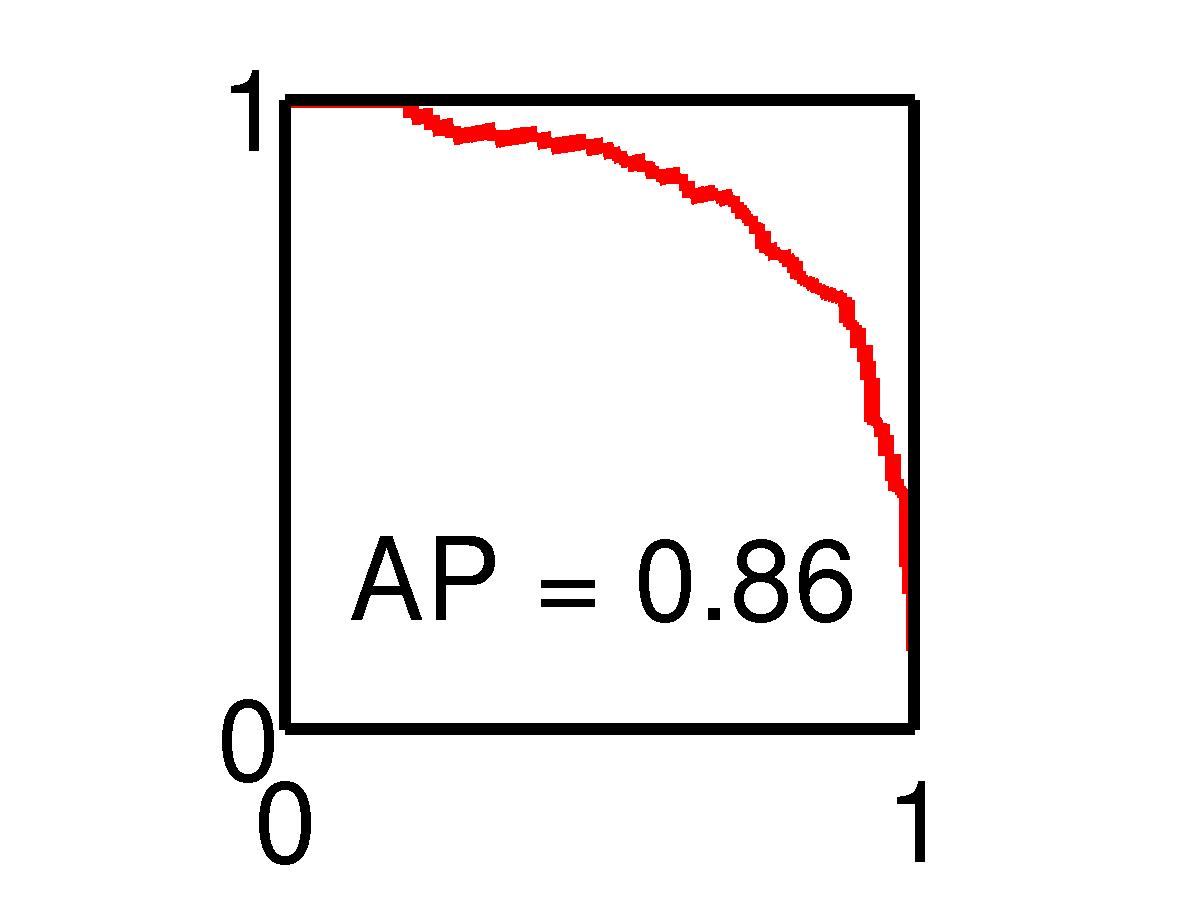

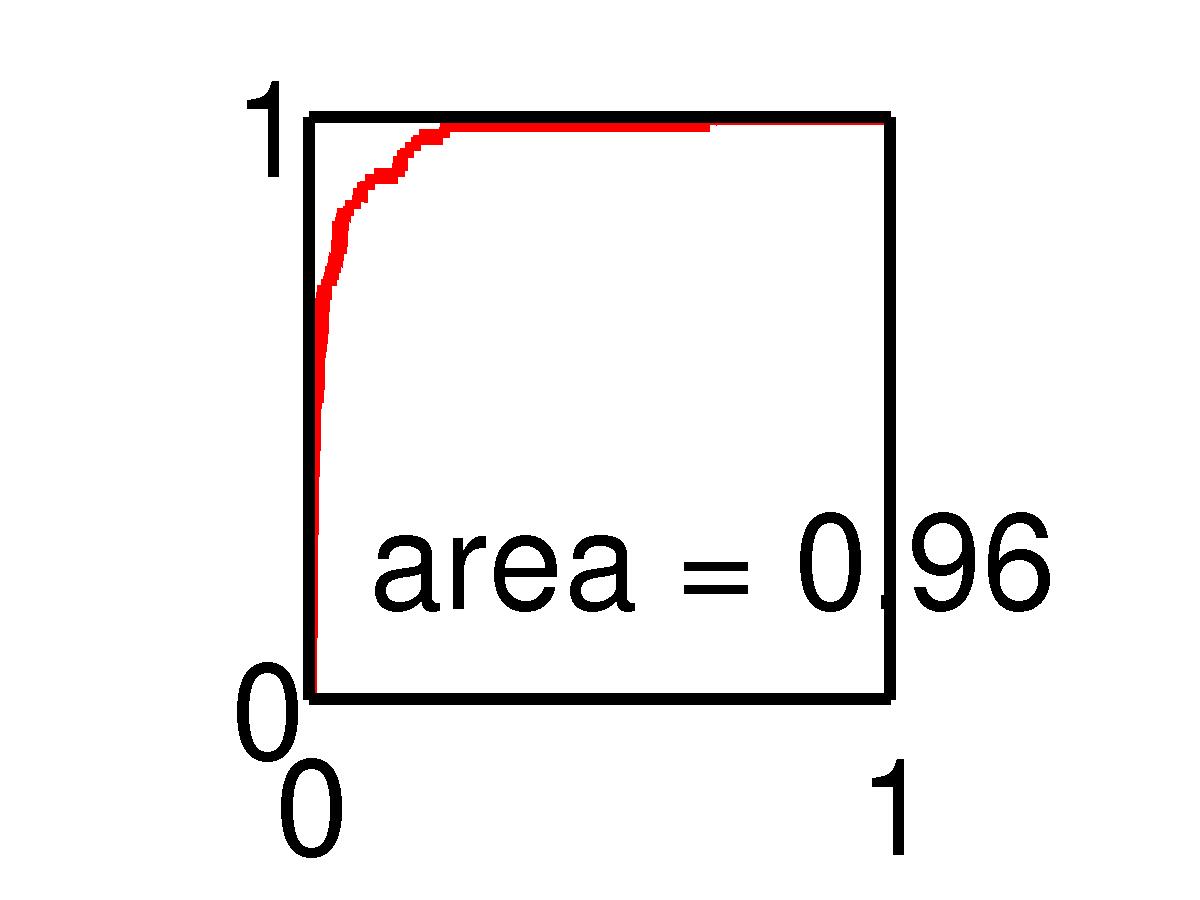

Human actions naturally co-occur with scenes. In this work we aim to discover action-scene correlation for a large number of scene categories and to use such correlation for action prediction. Towards this goal, we collect a new SUN Action dataset with manual annotations of typical human actions for 397 scenes. We next discover action-scene associations and demonstrate that scene categories can be precisely identified from their associated actions. Using the discovered associations, we address a new task of predicting human actions for images of static scenes. Automatic prediction of 38 action classes on 194 outdoor scene categories and 23 action classes on 203 indoor scene categories shows promising results. We also propose a new application of geo-localized action prediction and demonstrate the ability of our method to automatically answer queries such as “Where is a good place for a picnic?” or “Can I cycle along this path?”.
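As a rough illustration of how scene-action associations could drive action prediction for a static image, the sketch below scores each action by summing scene probabilities over the scenes associated with that action. This is a toy under stated assumptions, not the method of the paper: the scene names, action names, association values, the scene probabilities, and the `predict_actions` helper are all hypothetical and are not taken from the SUN Action dataset.

```python
# Toy sketch only: all names and values below are made up for illustration.
ACTIONS = ["swimming", "cooking", "cycling", "walking"]

# ASSOCIATION[scene][action] = 1 if the action is typical for the scene.
ASSOCIATION = {
    "beach": {"swimming": 1, "cooking": 0, "cycling": 0, "walking": 1},
    "kitchen": {"swimming": 0, "cooking": 1, "cycling": 0, "walking": 0},
    "forest_path": {"swimming": 0, "cooking": 0, "cycling": 1, "walking": 1},
}


def predict_actions(scene_probs):
    """Rank actions by the scene-probability-weighted sum of associations.

    scene_probs maps a scene name to the probability that an (assumed)
    scene classifier assigns to that scene for one image.
    """
    scores = {action: 0.0 for action in ACTIONS}
    for scene, prob in scene_probs.items():
        for action in ACTIONS:
            scores[action] += prob * ASSOCIATION[scene][action]
    # Highest score first; ties keep the order of ACTIONS (stable sort).
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical classifier output for an image that looks like a forest path.
ranked = predict_actions({"beach": 0.1, "kitchen": 0.1, "forest_path": 0.8})
for action, score in ranked:
    print(f"{action}: {score:.2f}")
```

With these made-up inputs, "walking" and "cycling" rank highest because both are associated with the most probable scene. A real system would need learned scene or action classifiers and the annotated associations from the dataset.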

Paper

BibTeX

@inproceedings{VuTH14,
  author = "VU, T.H. and Olsson, C. and Laptev, I. and Oliva, A. and Sivic, J.",
  title = "Predicting Actions from Static Scenes",
  booktitle = "ECCV",
  year = "2014",
}

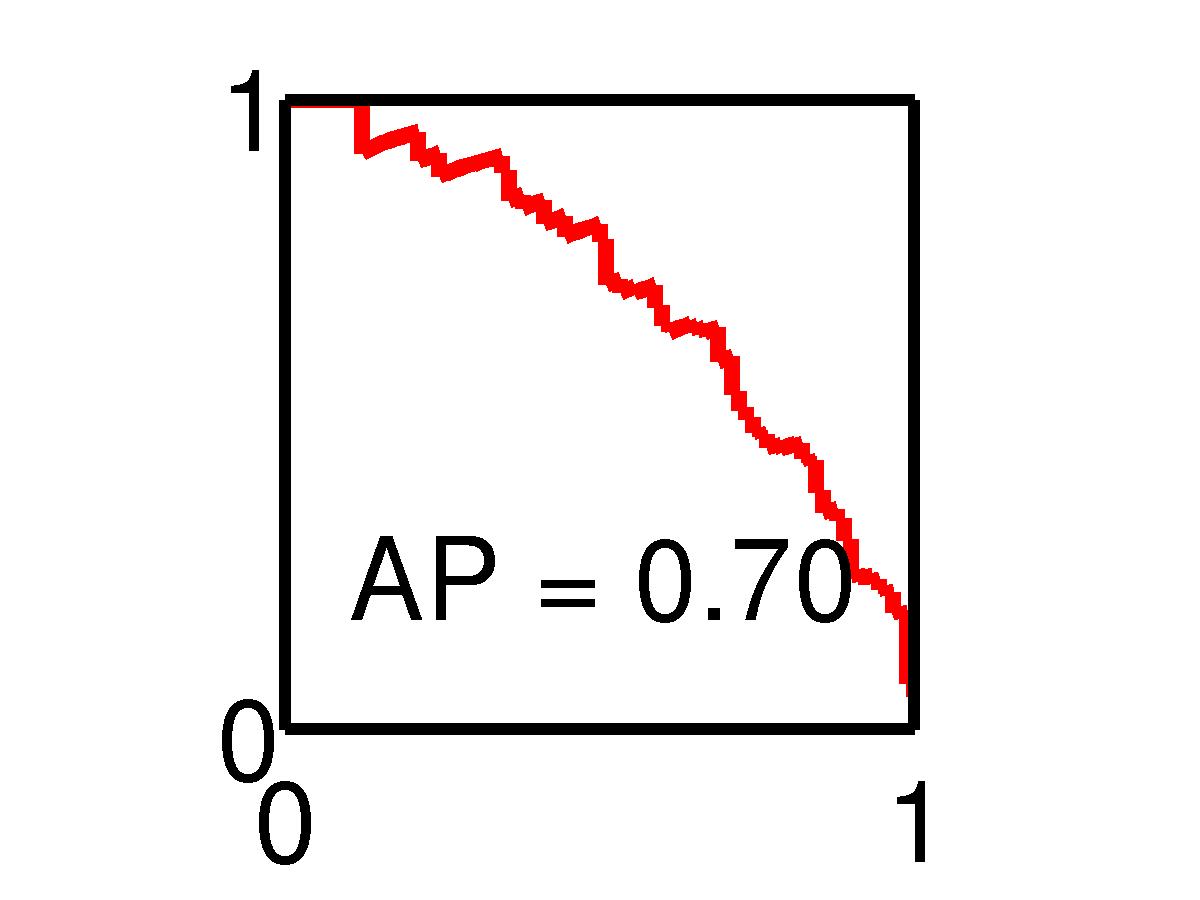

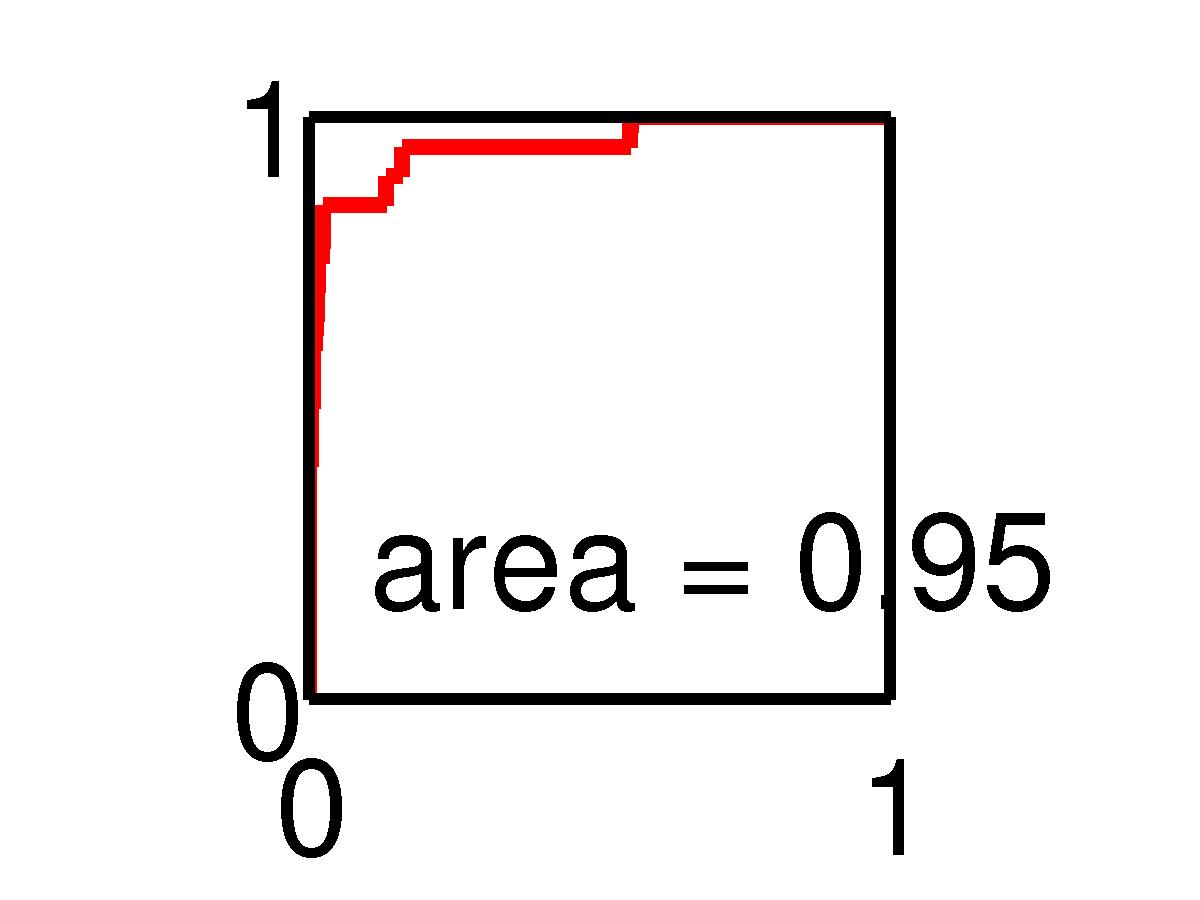

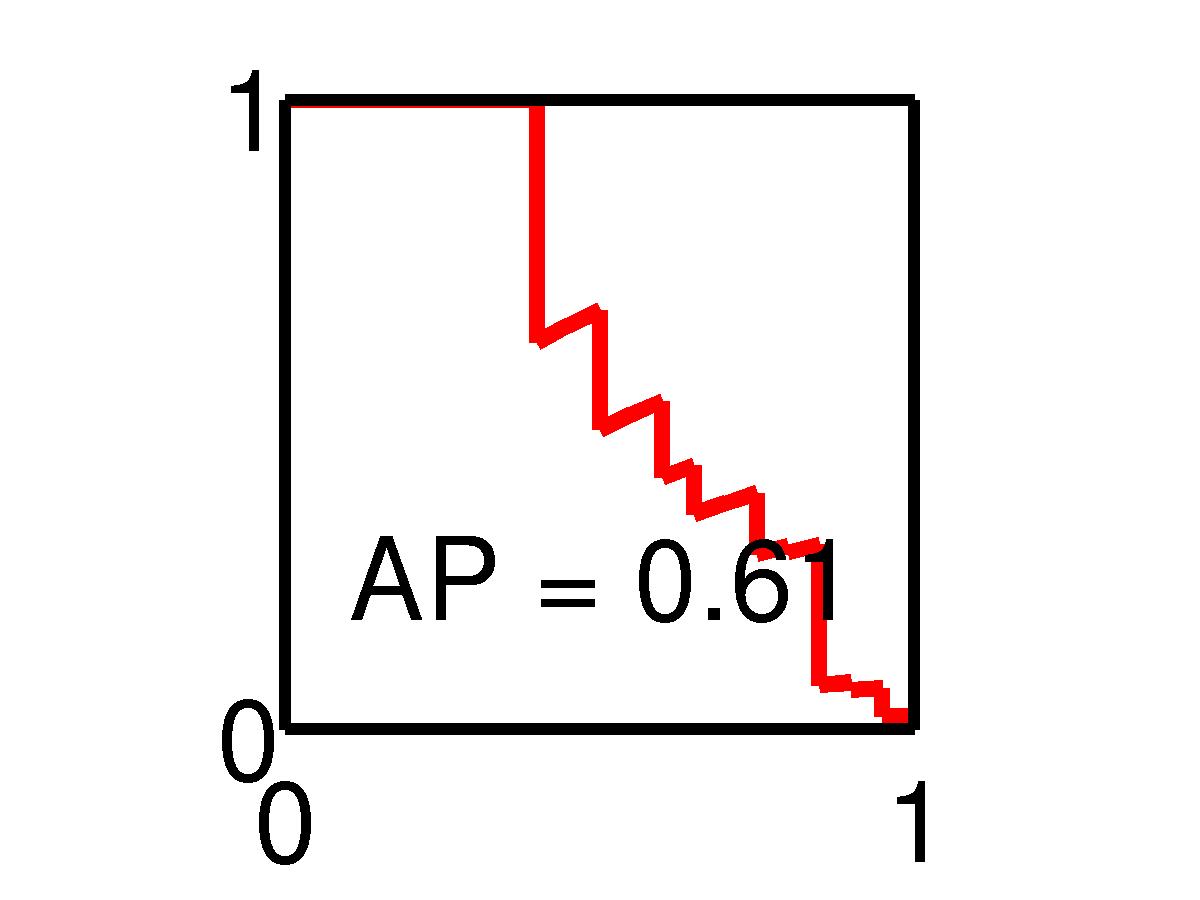

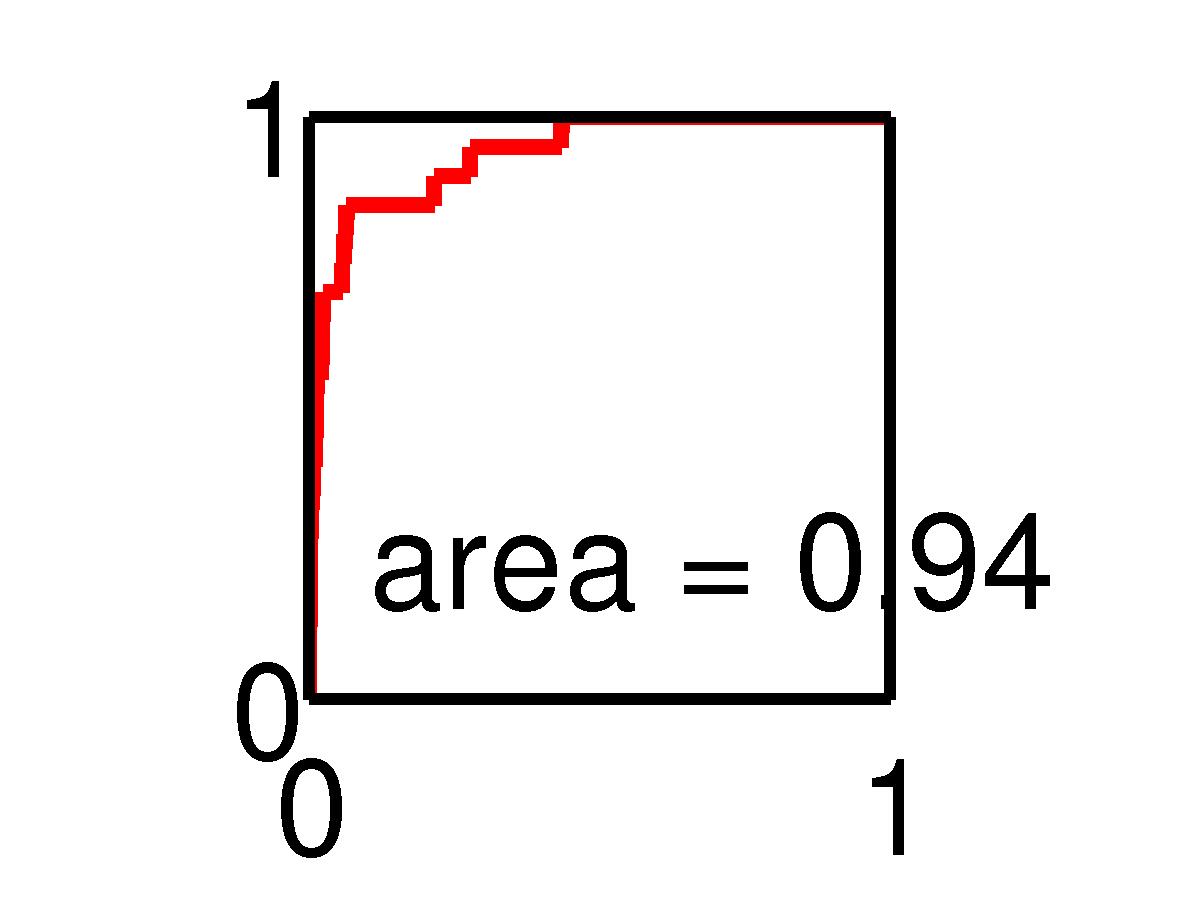

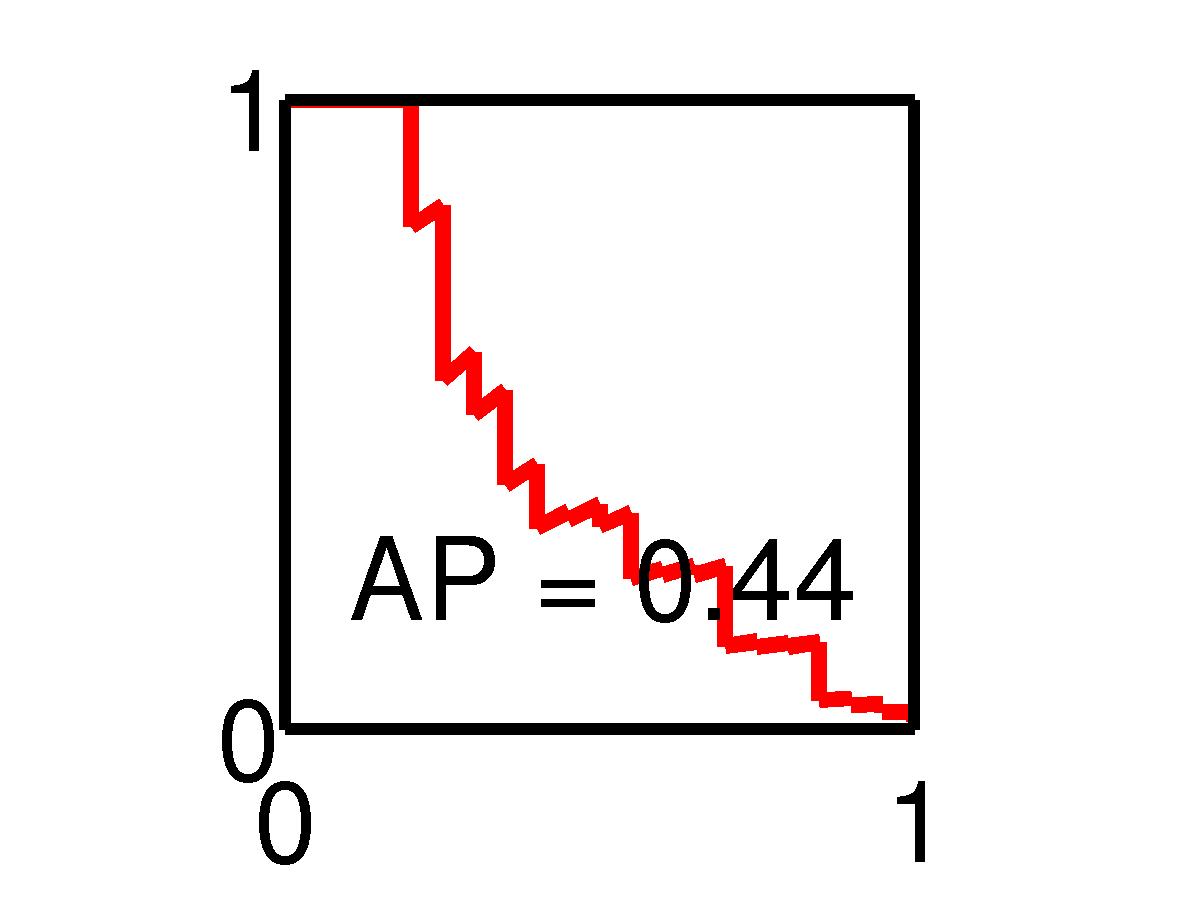

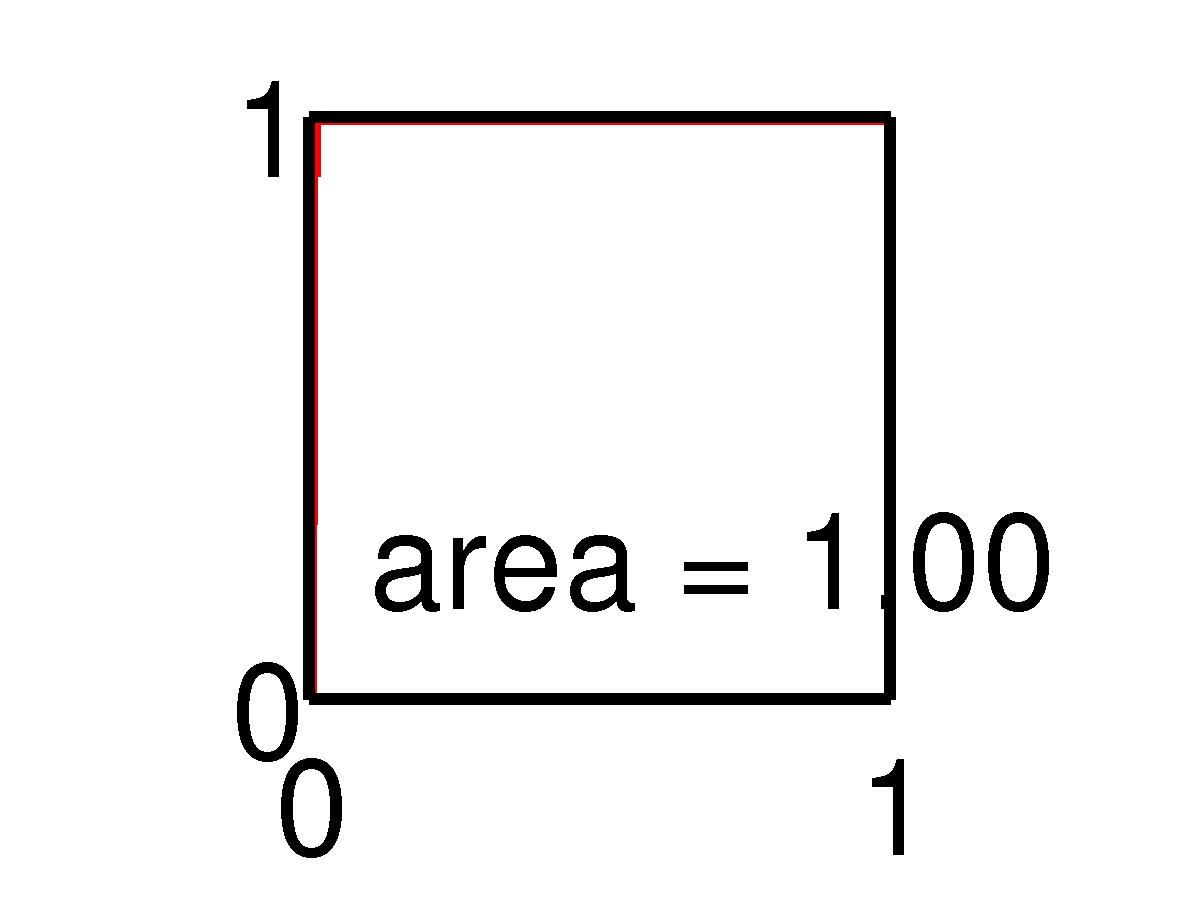

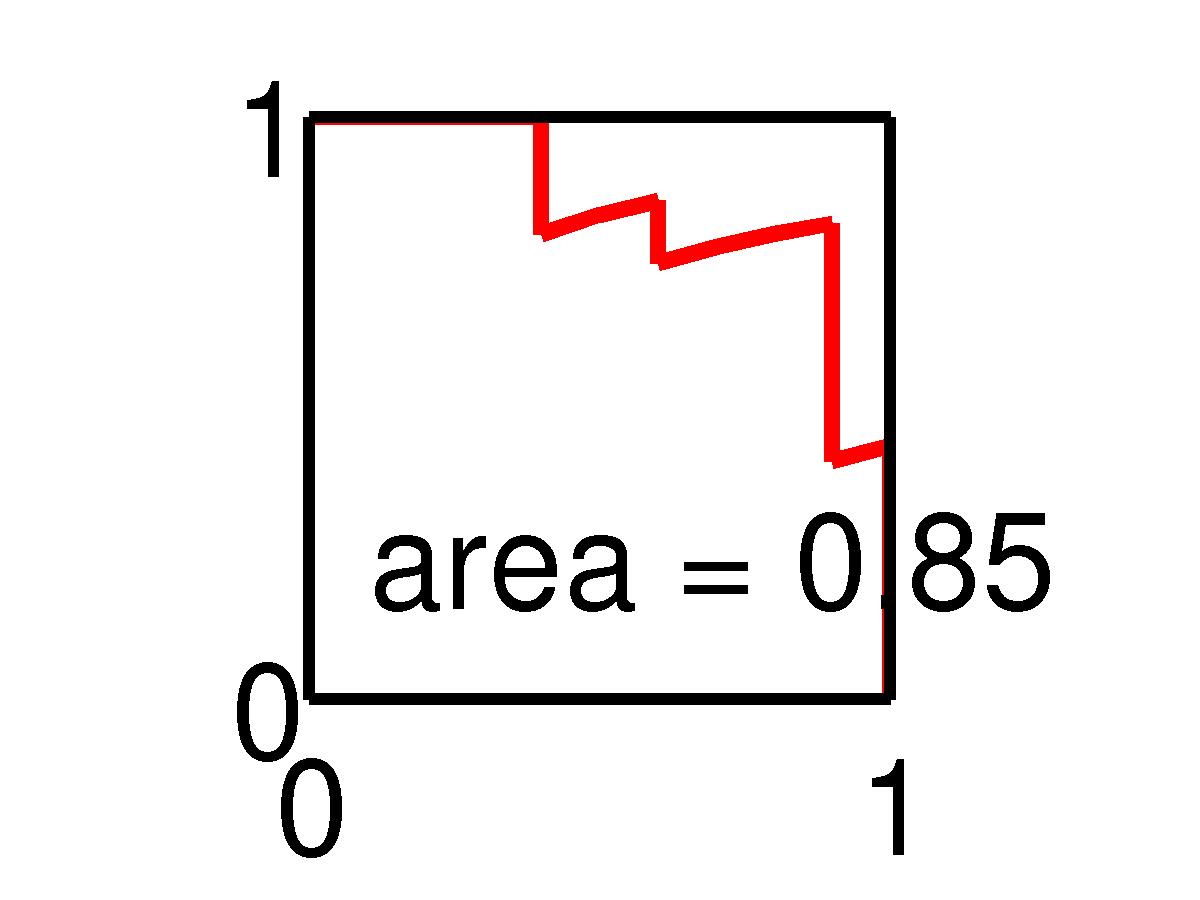

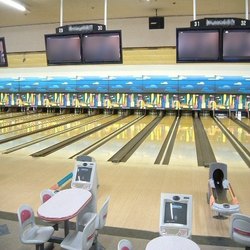

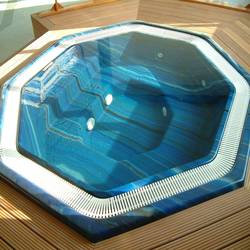

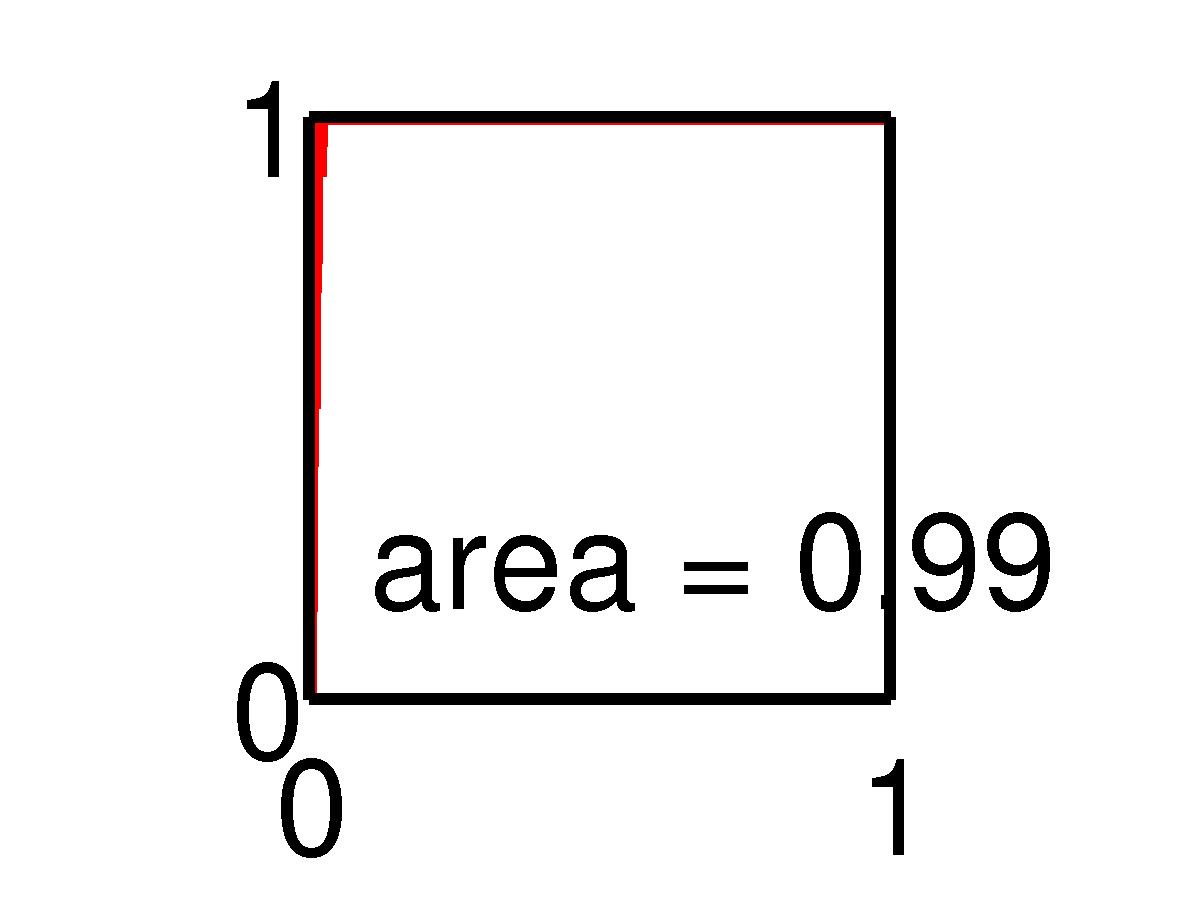

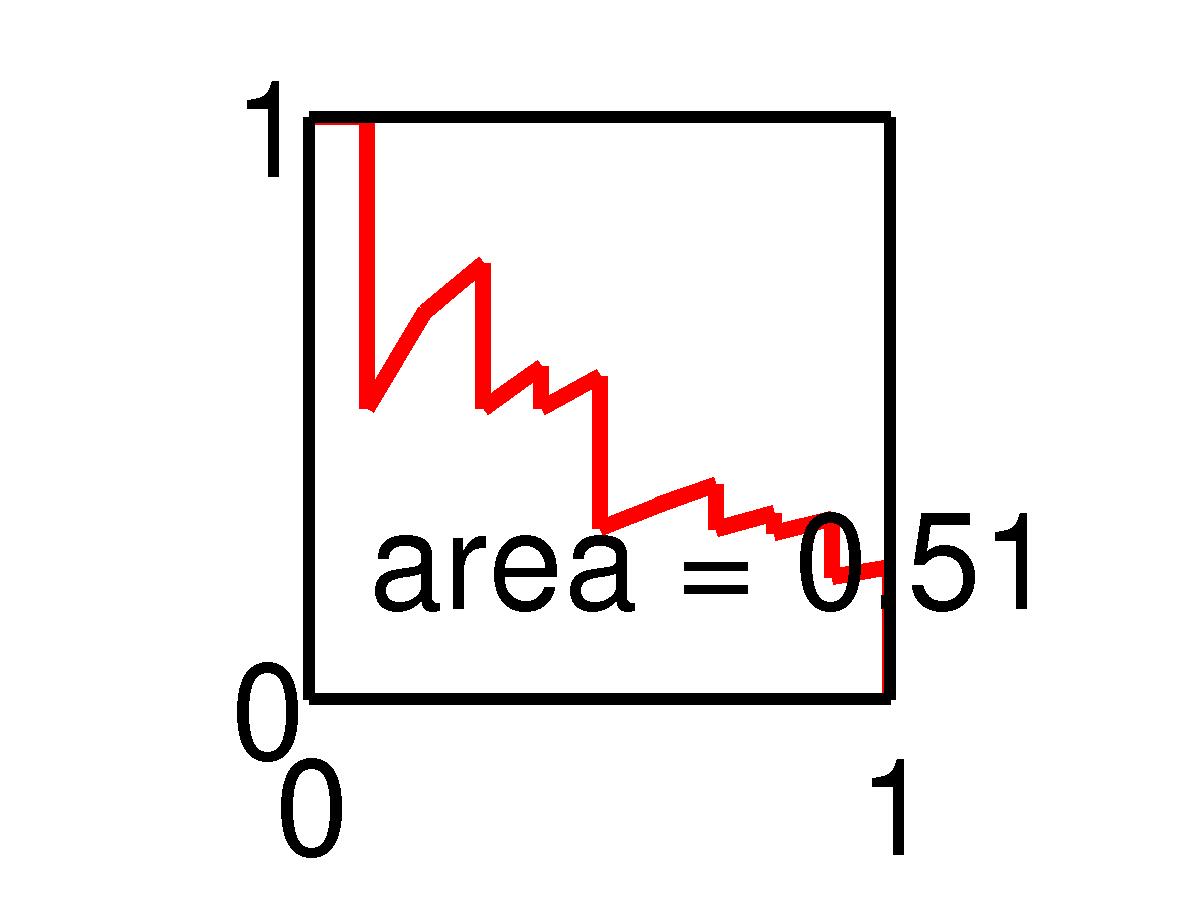

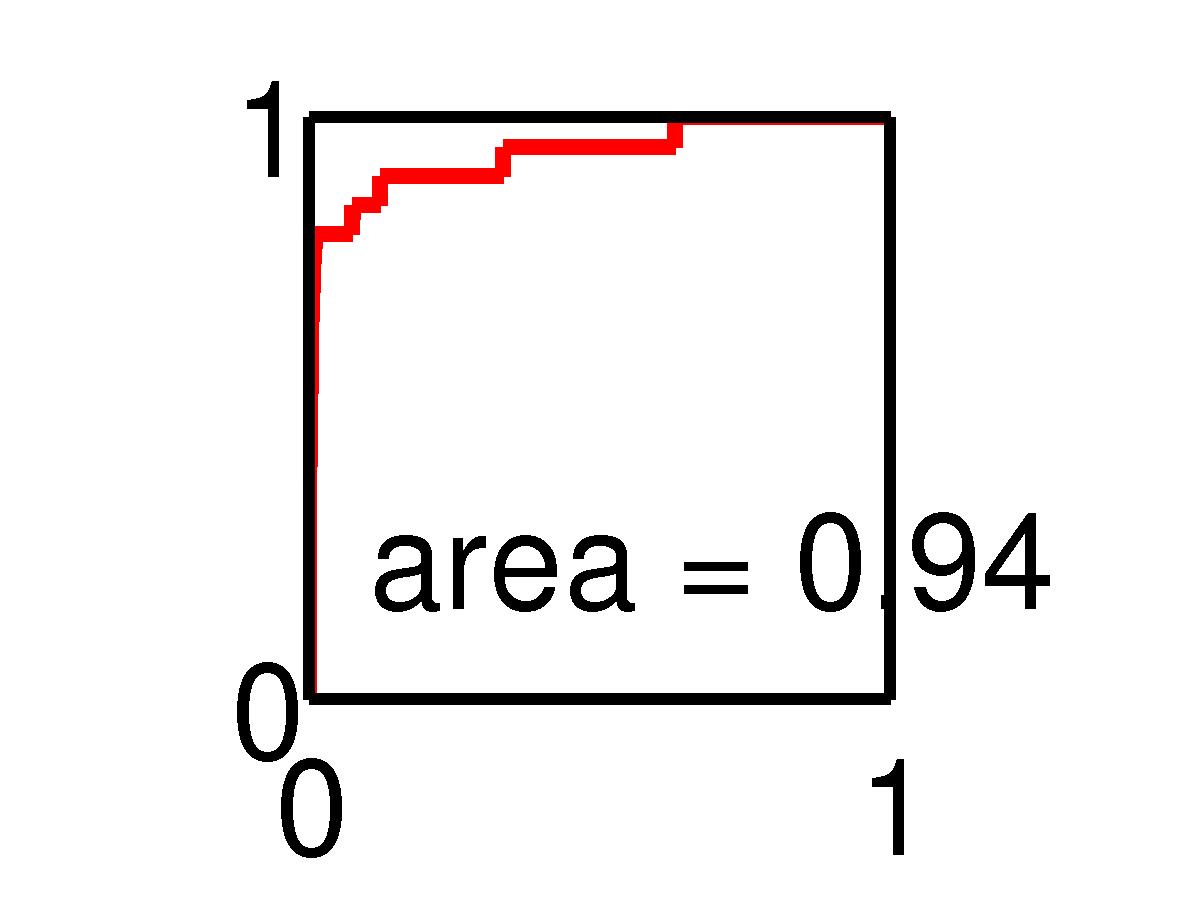

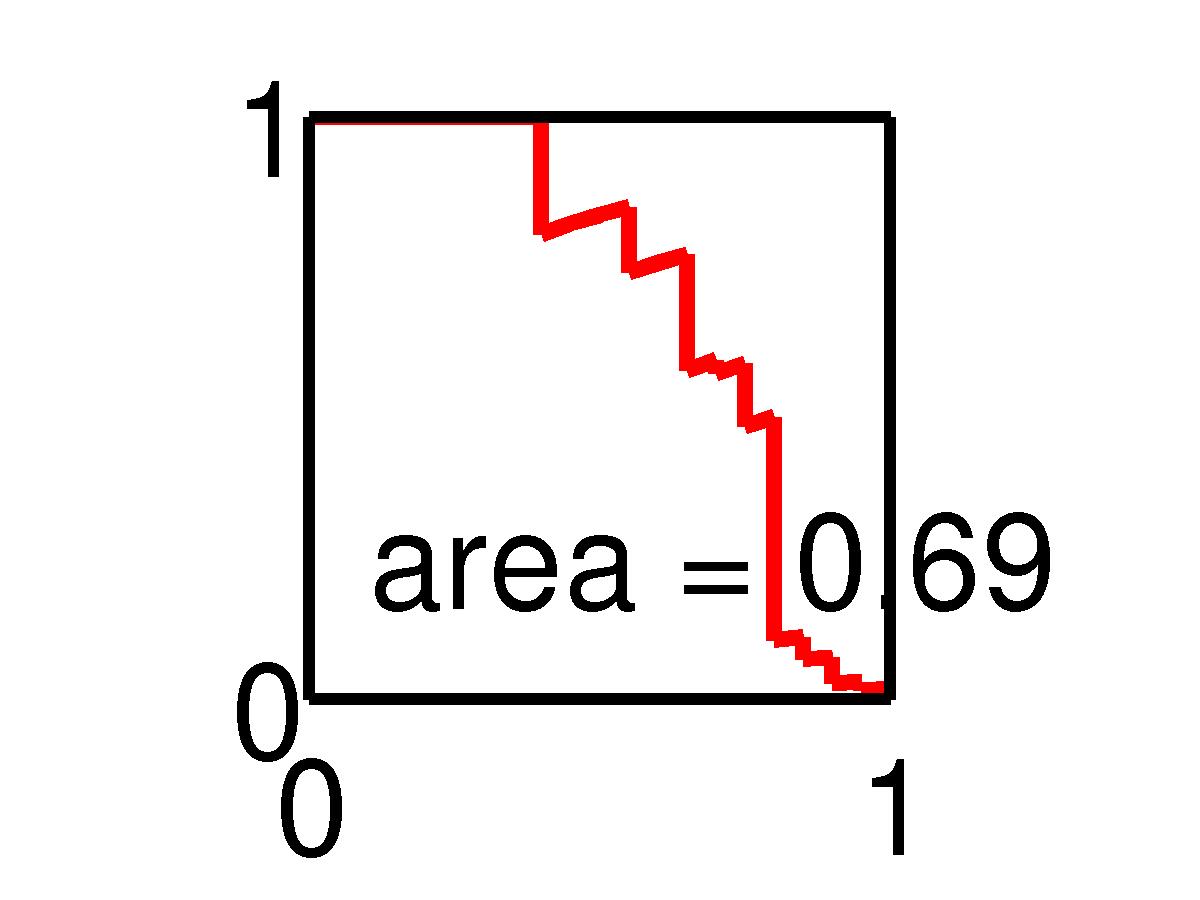

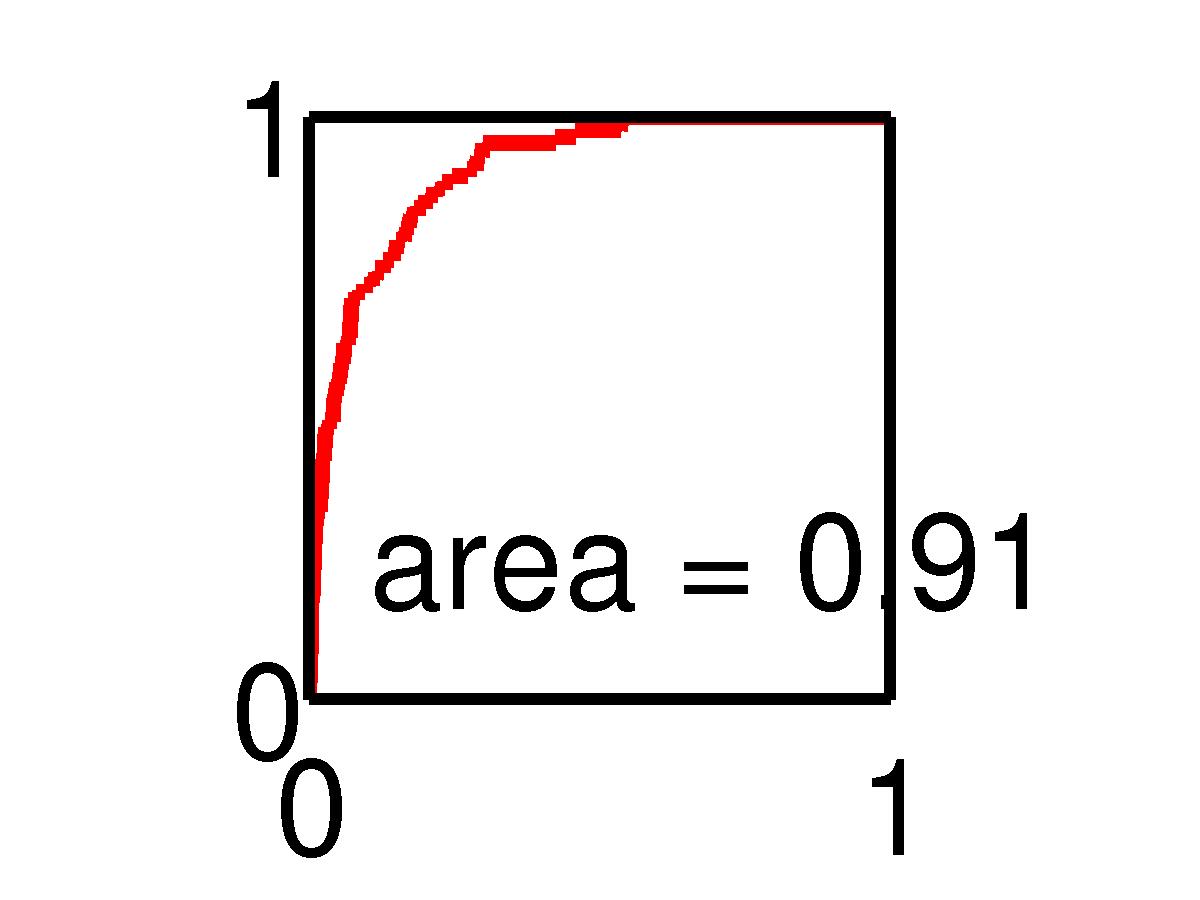

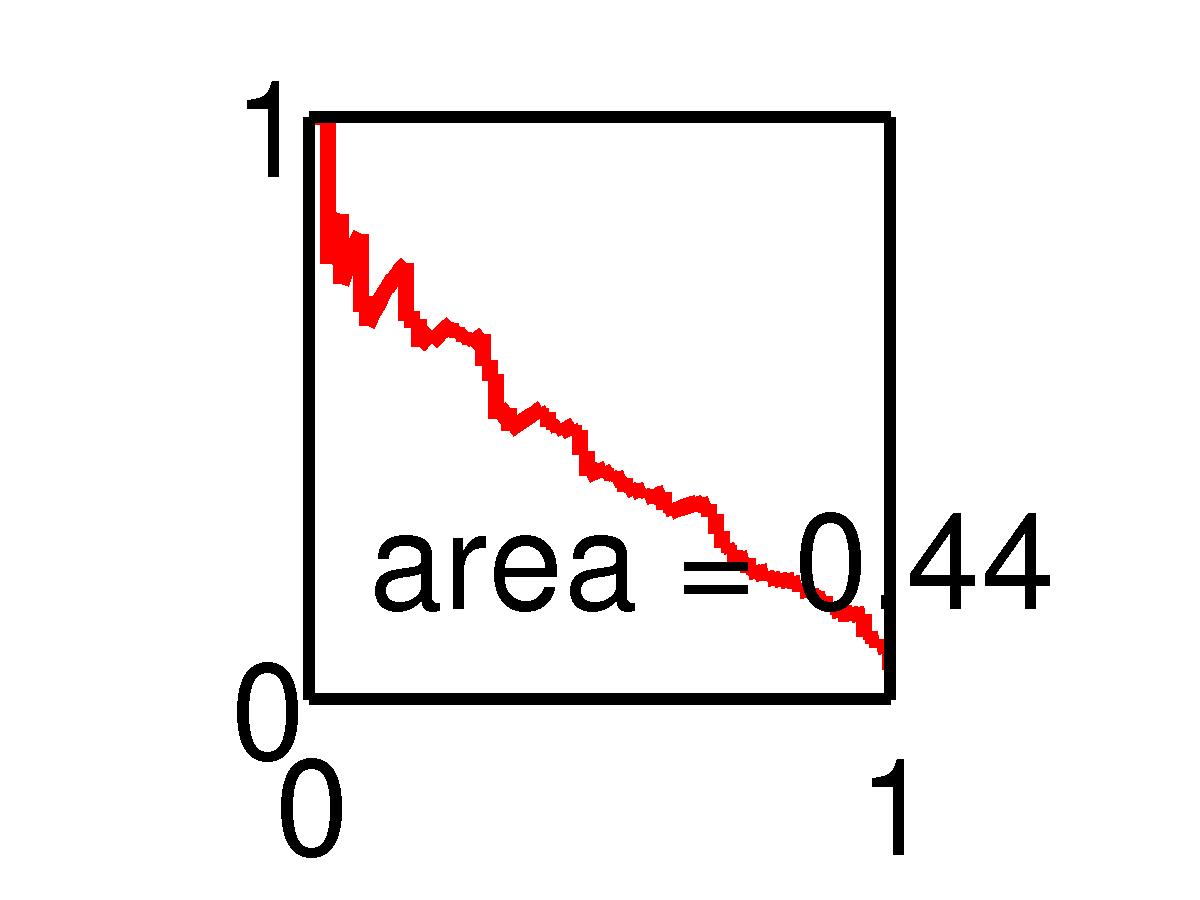

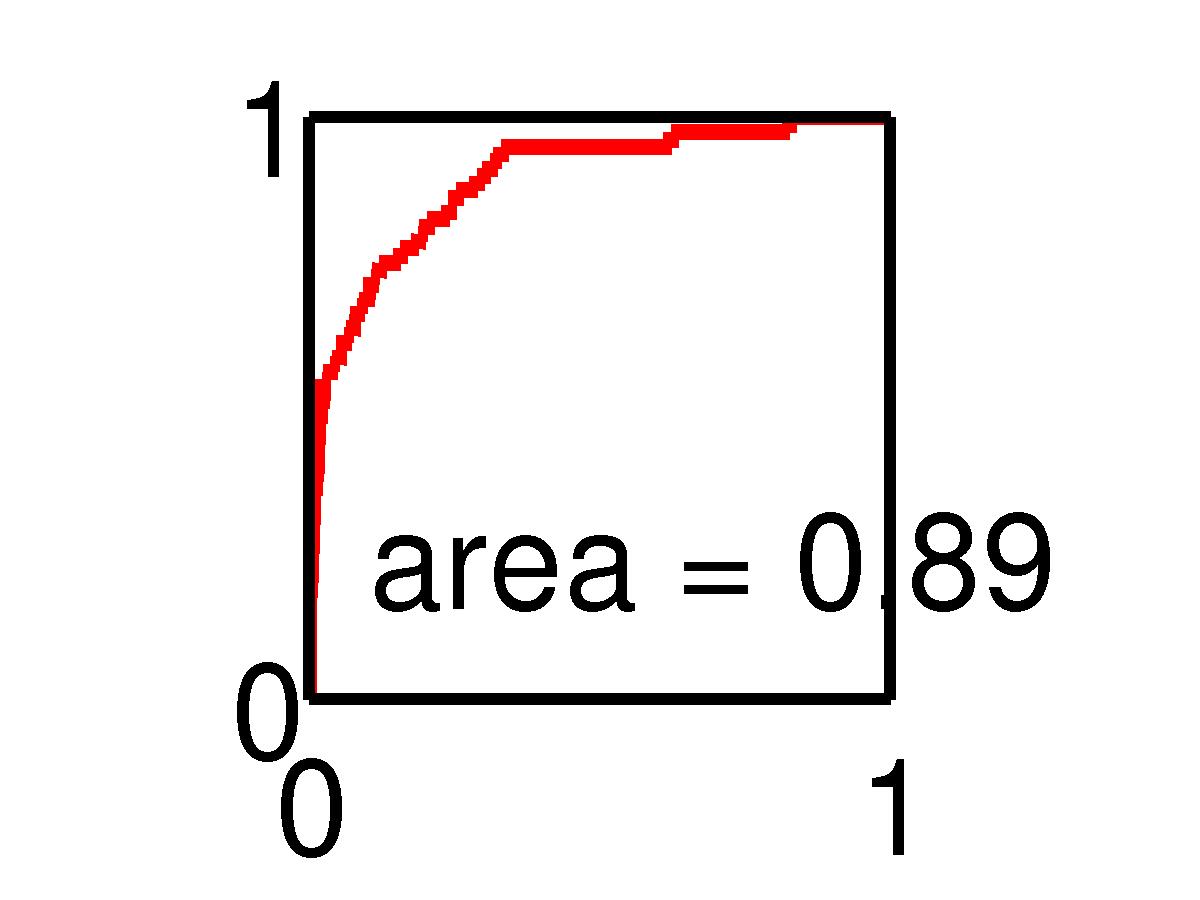

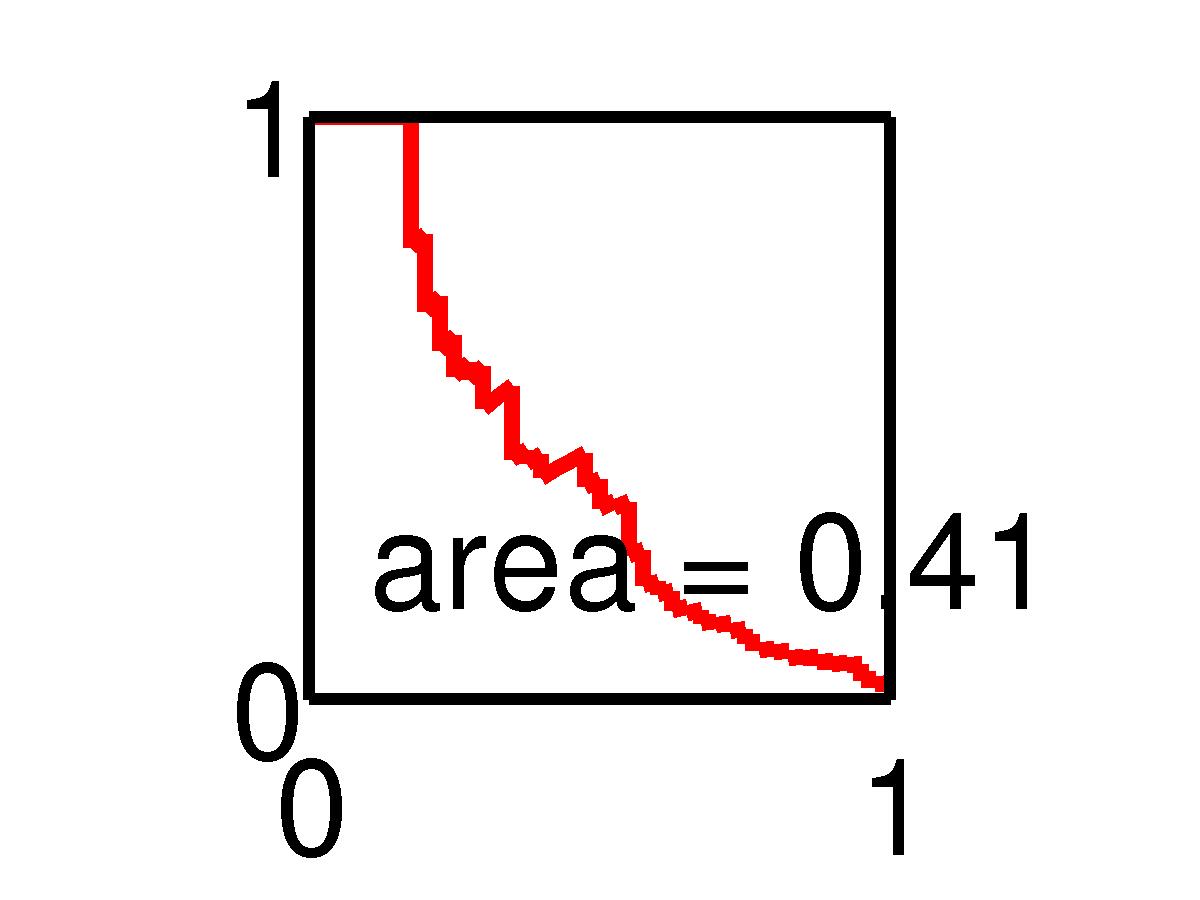

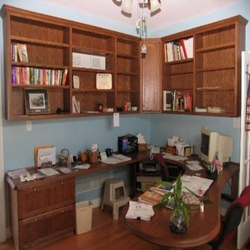

Action Prediction Results

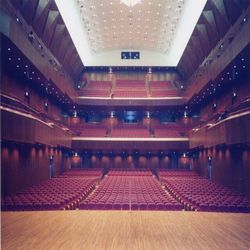

Application I: IGMA - Image-based Geo-Mapping of Action

Application II: Dense geo-localized prediction of actions

Acknowledgements

This work is partly funded by ERC Activia, US National Science Foundation grant 1016862, and a Google Research Award to A.O. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation and other funding agencies.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.