Learning a Text-Video Embedding from Incomplete and Heterogeneous Data

People

Abstract

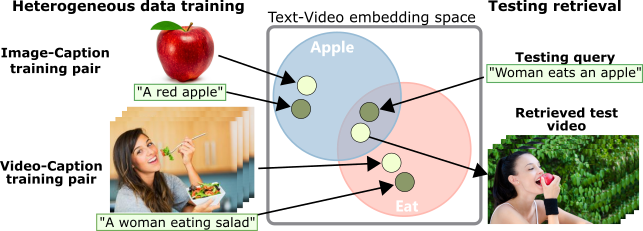

Joint understanding of video and language is an active research area with many applications. Prior work in this domain typically relies on learning text-video embeddings. One difficulty with this approach, however, is the lack of large-scale annotated video-caption datasets for training. To address this issue, we aim to learn text-video embeddings from heterogeneous data sources. To this end, we propose a Mixture-of-Embedding-Experts (MEE) model with the ability to handle missing input modalities during training. As a result, our framework can learn improved text-video embeddings simultaneously from image and video datasets. We also show that MEE generalizes to other input modalities, such as face descriptors. We evaluate our method on the task of video retrieval and report results on the MPII Movie Description and MSR-VTT datasets. The proposed MEE model demonstrates significant improvements and outperforms previously reported methods on both text-to-video and video-to-text retrieval tasks.
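The abstract does not spell out how a mixture of experts can cope with a missing modality, so here is a minimal sketch under stated assumptions, not the released implementation. It assumes each modality has its own expert embedding for text and for video, each expert contributes a cosine similarity, and the mixture weights come from a softmax over per-modality logits that is taken only over the modalities actually present in the sample. Under this assumption a still image, which has appearance but no motion or audio, is scored with the appearance expert alone. The function `mee_similarity`, its arguments, and the modality names `appearance` and `motion` are hypothetical.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def l2_normalize(v: Vector) -> Vector:
    """Scale v to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v] if norm > 0 else list(v)


def mee_similarity(
    text_embeddings: Dict[str, Vector],
    video_embeddings: Dict[str, Optional[Vector]],
    weight_logits: Dict[str, float],
) -> float:
    """Hypothetical MEE-style score: weighted sum of per-expert cosine similarities.

    A modality whose video embedding is None (or absent) is treated as missing:
    its expert is dropped and the softmax weights are renormalized over the rest.
    """
    available = [m for m in text_embeddings if video_embeddings.get(m) is not None]
    if not available:
        raise ValueError("no modality is available for this sample")

    # Softmax restricted to the available experts (max subtracted for stability).
    max_logit = max(weight_logits[m] for m in available)
    exps = {m: math.exp(weight_logits[m] - max_logit) for m in available}
    total = sum(exps.values())

    score = 0.0
    for m in available:
        weight = exps[m] / total
        cosine = dot(l2_normalize(text_embeddings[m]), l2_normalize(video_embeddings[m]))
        score += weight * cosine
    return score
```

For example, with equal logits a video whose motion expert disagrees with the text (cosine of -1) but whose appearance expert agrees (cosine of 1) scores 0.0, while the same pair with the motion modality missing, as for an image, scores 1.0 because the full weight moves to the appearance expert. Renormalizing rather than zero-filling the missing embedding keeps scores from image and video samples on the same scale.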

Retrieval web-demo


Paper

BibTeX

@InProceedings{miech18learning,
  author = "Miech, Antoine and Laptev, Ivan and Sivic, Josef",
  title = "{L}earning a {T}ext-{V}ideo {E}mbedding from {I}ncomplete and {H}eterogeneous {D}ata",
  booktitle = "arXiv",
  year = "2018"
}

Code

GitHub repo: here

Acknowledgements

This work was partly supported by ERC grants Activia (no. 307574) and LEAP (no. 336845), the CIFAR Learning in Machines & Brains program, ESIF, OP Research, Development and Education Project IMPACT No. CZ.02.1.01/0.0/0.0/15_003/0000468, and a Google Research Award.