Synthetic Humans for Action Recognition from Unseen Viewpoints

Abstract

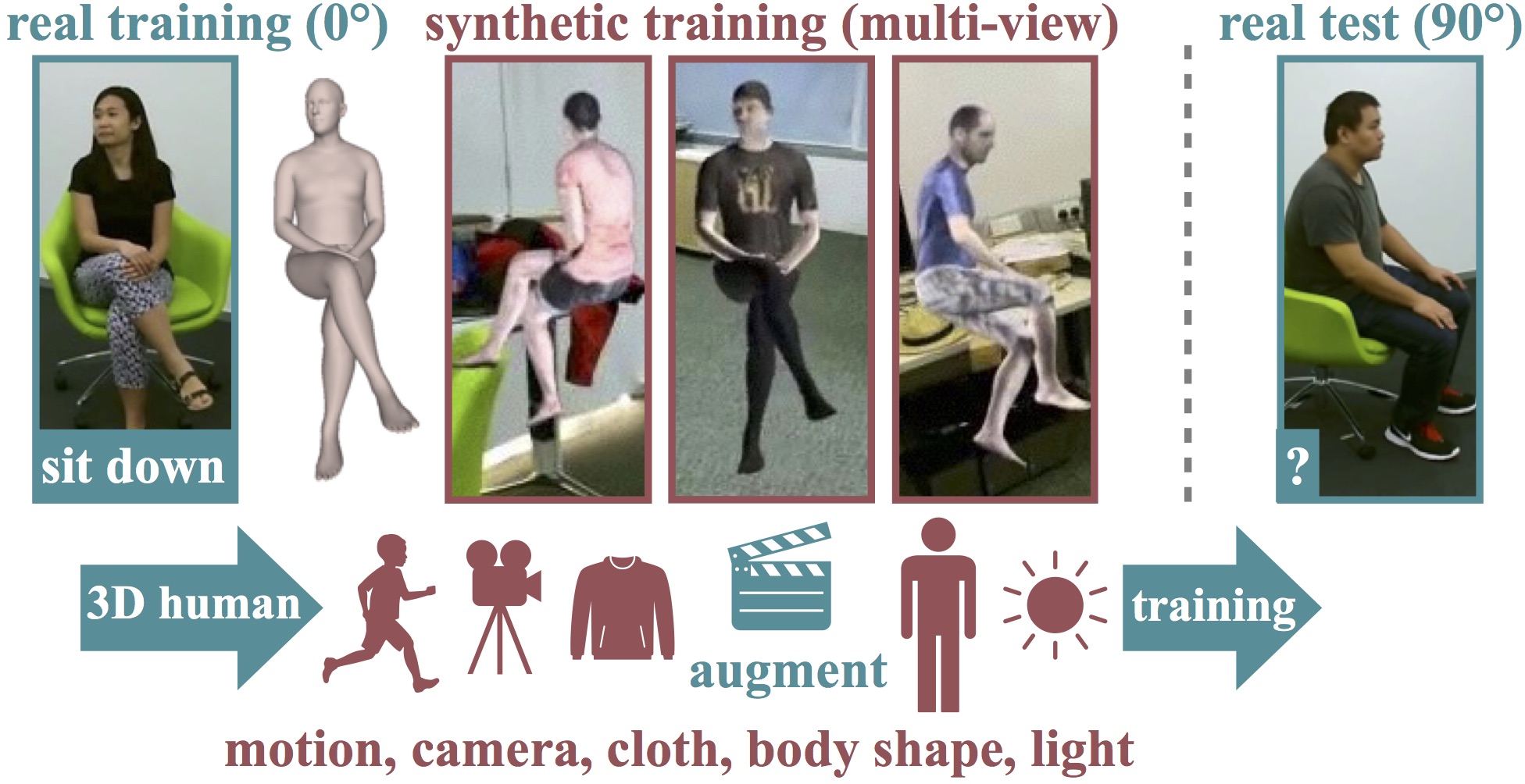

Although synthetic training data has been shown to be beneficial for tasks such as human pose estimation, its use for RGB human action recognition is relatively unexplored. Our goal in this work is to answer the question of whether synthetic humans can improve the performance of human action recognition, with a particular focus on generalization to unseen viewpoints. We make use of recent advances in monocular 3D human body reconstruction from real action sequences to automatically render synthetic training videos for the action labels. We make the following contributions: (i) we investigate the extent of variations and augmentations that are beneficial to improving performance at new viewpoints. We consider changes in body shape and clothing for individuals, as well as more action-relevant augmentations such as non-uniform frame sampling and interpolating between the motion of individuals performing the same action; (ii) we introduce a new data generation methodology, SURREACT, that allows training of spatio-temporal CNNs for action classification; (iii) we substantially improve the state-of-the-art action recognition performance on the NTU RGB+D and UESTC standard multi-view human action benchmarks; finally, (iv) we extend the augmentation approach to in-the-wild videos from a subset of the Kinetics dataset to investigate the case when only one-shot training data is available, and demonstrate improvements in this case as well.
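One of the action-relevant augmentations listed above, non-uniform frame sampling, can be pictured with a short sketch. The Python below is a minimal illustration under simple assumptions, not the SURREACT implementation: the function names `non_uniform_frame_indices` and `uniform_frame_indices` are hypothetical, and drawing sorted indices without replacement is just one plausible way to vary the temporal spacing of a clip while preserving frame order.

```python
import random


def uniform_frame_indices(num_frames, clip_len):
    """Hypothetical baseline: evenly spaced frame indices."""
    if clip_len > num_frames:
        raise ValueError("clip_len must not exceed num_frames")
    step = num_frames / clip_len
    return [int(i * step) for i in range(clip_len)]


def non_uniform_frame_indices(num_frames, clip_len, rng=None):
    """Hypothetical sketch of non-uniform frame sampling.

    Assumption (not taken from the paper): pick clip_len distinct frames
    at random and sort them, so temporal order is kept but the gaps
    between consecutive frames are irregular.
    """
    if clip_len > num_frames:
        raise ValueError("clip_len must not exceed num_frames")
    rng = rng or random.Random()
    # Sampling without replacement avoids duplicate frames;
    # sorting restores the original temporal order.
    return sorted(rng.sample(range(num_frames), clip_len))


if __name__ == "__main__":
    rng = random.Random(0)
    print(uniform_frame_indices(100, 16))
    print(non_uniform_frame_indices(100, 16, rng))
```

For example, calling `non_uniform_frame_indices(100, 16, random.Random(seed))` with different seeds yields 16 increasing indices into a 100-frame rendered video with irregular, locally varying playback speed, whereas `uniform_frame_indices` always returns the same evenly spaced set.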

Paper

- arXiv

- Code & Data

- See also the SURREAL project.

BibTeX

@ARTICLE{varol21_surreact,
  title = {Synthetic Humans for Action Recognition from Unseen Viewpoints},
  author = {Varol, G{\"u}l and Laptev, Ivan and Schmid, Cordelia and Zisserman, Andrew},
  journal = {IJCV},
  year = {2021}
}

SURREACT dataset

The video below shows example sequences from our synthetic human videos.

Part (i): synthetic augmentations for each action of the NTU dataset

Part (ii) [at 3 minutes 15 seconds]: synthetic augmentations at 0°, 45°, and 90° for each action of the UESTC dataset

Part (iii) [at 5 minutes 50 seconds]: synthetic augmentations for Kinetics videos

Acknowledgements

This work was supported in part by Google Research, EPSRC grant ExTol, Louis Vuitton ENS Chair on Artificial Intelligence, and DGA project DRAAF. We thank Angjoo Kanazawa, Fabien Baradel, and Max Bain for helpful discussions, and Philippe Weinzaepfel and Nieves Crasto for providing pre-trained models.

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.