Paper

S. Kwak, M. Cho, I. Laptev, J. Ponce, C. Schmid

Unsupervised Object Discovery and Tracking in Video Collections

Proceedings of the IEEE International Conference on Computer Vision (2015)

PDF | Abstract | BibTeX |

Unsupervised Object Discovery and Tracking in Video Collections

Proceedings of the IEEE International Conference on Computer Vision (2015)

PDF | Abstract | BibTeX |

Abstract

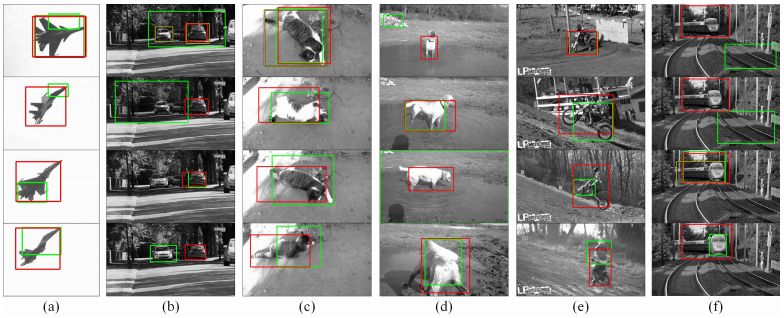

This paper addresses the problem of automatically localizing dominant objects as spatio-temporal tubes in a noisy collection of videos with minimal or even no supervision. We formulate the problem as a combination of two complementary processes: discovery and tracking. The first one establishes correspondences between prominent regions across videos, and the second one associates similar object regions within the same video. Interestingly, our algorithm also discovers the implicit topology of frames associated with instances of the same object class across different videos, a role normally left to supervisory information in the form of class labels in conventional image and video understanding methods. Indeed, as demonstrated by our experiments, our method can handle video collections featuring multiple object classes, and substantially outperforms the state of the art in colocalization, even though it tackles a broader problem with much less supervision.BibTeX

@InProceedings{kwak2015,

author = {Kwak, S. and Cho, M. and Laptev, I. and Ponce, J. and Schmid, C.},

title = {Unsupervised Object Discovery and Tracking in Video Collections},

booktitle = {International Conference on Computer Vision},

year = {2015},

}

Demo videos

| Spotlight video | More example results |