Unsupervised learning from narrated instruction videos

People

- Jean-Baptiste Alayrac

- Piotr Bojanowski

- Nishant Agrawal

- Ivan Laptev

- Josef Sivic

- Simon Lacoste-Julien

Abstract

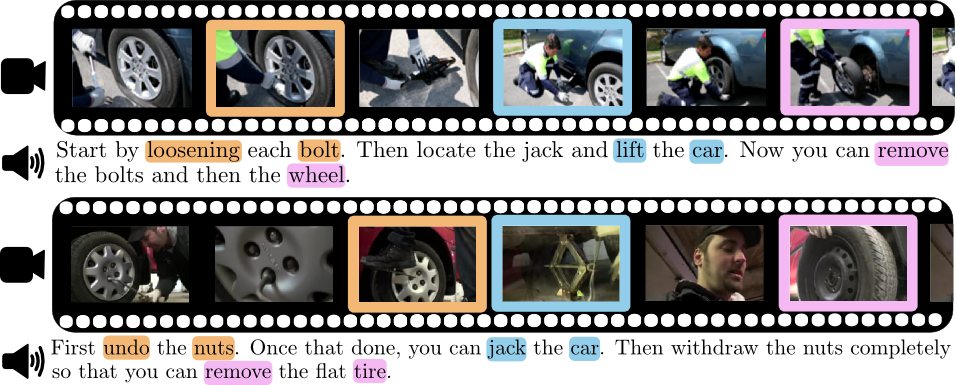

We address the problem of automatically learning the main steps to complete a certain task, such as changing a car tire, from a set of narrated instruction videos. The contributions of this paper are three-fold. First, we develop a new unsupervised learning approach that takes advantage of the complementary nature of the input video and the associated narration. The method solves two clustering problems, one in text and one in video, applied one after the other and linked by joint constraints to obtain a single coherent sequence of steps in both modalities. Second, we collect and annotate a new challenging dataset of real-world instruction videos from the Internet. The dataset contains about 800,000 frames for five different tasks (how to: change a car tire, perform cardiopulmonary resuscitation (CPR), jump-start a car, repot a plant, and make coffee) that include complex interactions between people and objects and are captured in a variety of indoor and outdoor settings. Third, we experimentally demonstrate that the proposed method can automatically discover, in an unsupervised manner, the main steps to achieve the task and locate these steps in the input videos.
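The abstract states that the video clustering is constrained so that the discovered steps form a single ordered sequence. The toy sketch below only illustrates what such an ordering constraint means. It is not the authors' formulation or solver. It assumes a hypothetical `scores` matrix giving an affinity between each of T time intervals and each of K ordered steps, and it forces every step to appear exactly once (a simplification). Dynamic programming then places step k strictly after step k-1 so that the total score is maximized.

```python
from typing import List, Tuple


def ordered_step_assignment(scores: List[List[float]]) -> Tuple[float, List[int]]:
    """Toy sketch: assign each of K ordered steps to one distinct interval.

    scores[t][k] is a hypothetical affinity between time interval t and
    step k. Step k must be placed strictly after step k-1. Returns the best
    total score and the chosen interval index for each step.
    """
    T = len(scores)
    K = len(scores[0]) if T else 0
    if K == 0 or K > T:
        raise ValueError("need at least one step and at least as many intervals as steps")
    NEG = float("-inf")
    # best[k][t]: best score with steps 0..k placed and step k at interval t
    best = [[NEG] * T for _ in range(K)]
    back = [[-1] * T for _ in range(K)]
    for t in range(T):
        best[0][t] = scores[t][0]
    for k in range(1, K):
        # Running max of best[k-1][0..t-1], i.e. strictly earlier intervals.
        run_max, run_arg = NEG, -1
        for t in range(T):
            if run_max > NEG:
                best[k][t] = scores[t][k] + run_max
                back[k][t] = run_arg
            if best[k - 1][t] > run_max:
                run_max, run_arg = best[k - 1][t], t
    # Backtrack from the best interval for the last step.
    last = max(range(T), key=lambda t: best[K - 1][t])
    total = best[K - 1][last]
    path = [last]
    for k in range(K - 1, 0, -1):
        path.append(back[k][path[-1]])
    path.reverse()
    return total, path


scores = [
    [0.9, 0.1, 0.0],
    [0.2, 0.3, 0.1],
    [0.1, 0.8, 0.2],
    [0.0, 0.4, 0.7],
]
total, path = ordered_step_assignment(scores)
```

With this made-up matrix, the steps land in intervals `[0, 2, 3]` for a total score of about 2.4. Interval 1 is skipped because placing step 1 there would score lower than waiting for interval 2. The prefix maximum keeps the search at O(K·T) rather than trying every ordered combination.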

Paper

[paper] [arXiv] [poster] [slides]

BibTeX

@InProceedings{Alayrac16unsupervised,
  author = "Alayrac, Jean-Baptiste and Bojanowski, Piotr and Agrawal, Nishant and Laptev, Ivan and Sivic, Josef and Lacoste-Julien, Simon",
  title = "Unsupervised learning from Narrated Instruction Videos",
  booktitle = "Computer Vision and Pattern Recognition (CVPR)",
  year = "2016"
}

Talk given at CVPR 2016

Video of the project

This video presents our results for automatically discovering the scenario of the following two tasks: changing a tire and performing cardiopulmonary resuscitation (CPR). At the bottom of the videos, there are three bars. The first one corresponds to our ground-truth annotation. The second one corresponds to our predicted time intervals in the video. Finally, the third one corresponds to the constraints that we obtain from the text domain. On the right, there is a list of labels. They correspond to the labels recovered by our NLP method in an unsupervised manner.

Code

To run the code, you will need the following data (see the direct download script in the README on the GitHub page): [NLP results] [NLP features] [VISION results] [VISION features]

Data

ERRATUM 1: There were some mistakes in the initial archive below; these have been corrected:

- The text files giving information about the videos (fps problem):

- Video changing_tire_0009.mpg did not have the correct fps. Please use this video instead:

ERRATUM 2: The manual subtitles for CPR are incorrect (some files are not the ones used in the paper); please use these instead:

Note that the framerate given in the annotation files (*.xgtf) should not be trusted; use the framerate given in the .txt files for the corresponding task instead.
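Because the framerate in the *.xgtf files is unreliable, frame-based annotations should be converted to time using the fps from the task's .txt file. The layout of those .txt files is not described on this page, so the minimal sketch below assumes you have already read the fps value yourself and passes it in as an argument. The function name `frames_to_seconds` is hypothetical, and the sketch assumes annotations are (start_frame, end_frame) pairs.

```python
from typing import List, Tuple


def frames_to_seconds(
    intervals: List[Tuple[int, int]], fps: float
) -> List[Tuple[float, float]]:
    """Convert (start_frame, end_frame) annotations to seconds.

    `fps` is assumed to come from the task's .txt file, not from the
    *.xgtf header, which may report a wrong framerate.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return [(start / fps, end / fps) for start, end in intervals]


print(frames_to_seconds([(0, 250), (500, 750)], 25.0))
# prints [(0.0, 10.0), (20.0, 30.0)]
```

If a wrong framerate such as 30 fps were used instead of 25 fps, frame 250 would map to about 8.3 s instead of 10 s. The mismatch grows with the frame index, so it would shift the later steps of a video the most.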

Acknowledgements

This research was supported in part by a Google research award and the ERC grants VideoWorld (no. 267907), Activia (no. 307574) and LEAP (no. 336845).